Five Things You Should Know Before Reading:

- CES 2026 is about platform reinforcement, not new silicon
- AMD is standardizing AI across the stack, not selling it as a premium
- Ryzen AI 400 is the real volume story
- Desktop and GPU updates are maintenance moves
- Software is where AMD is actually advancing

Please note we’re splitting up AMD Client and AMD Enterprise announcements, just because we realise some of the audience only focuses on one or the other.

For CES 2026, AMD has lined up a number of announcements across its client portfolio, spanning desktop, mobile, graphics, software, and embedded. The unifying theme isn’t a new CPU architecture or a new platform, but a refresh with tuned knobs, wider coverage, and more segmentation designed to keep the current stack relevant as the calendar flips, even as supply chain dynamics start tilting away from the consumer.

AMD is using CES to re-stack its lineup around the AI Everywhere narrative, positioning Ryzen AI as the default engine across the portfolio rather than a premium tier bolted on at the top. The goal is streamlining: modest hardware lifts, certification box-ticking, and pushing AI capability into lower-priced systems so OEMs can ship volume designs that meet Microsoft’s requirements. It generates noise, but it also signals that this cycle is about reinforcing AMD’s existing platform rather than resetting it.

The timing of these announcements also reflects where the PC market sits today. The initial “AI PC” shock-and-awe phase has passed, and the market has moved into a procurement and refresh cycle where buyers, OEMs, and Microsoft care less about halo products and more about consistent qualification across price bands. That dynamic incentivizes platform continuity over betting a cycle on a single architectural headline.

AMD’s announcements also come across like a defensive move against Intel’s ability to flood OEM designs with tightly segmented SKUs. Right now we’re writing this ahead of CES as part of a news embargo, without any knowledge of what Intel is going to bring to the market at the show, but AMD’s answer here is to ensure it has a comparable ladder, keeping its products and the Zen 5 microarchitecture relevant in the minds of consumers.

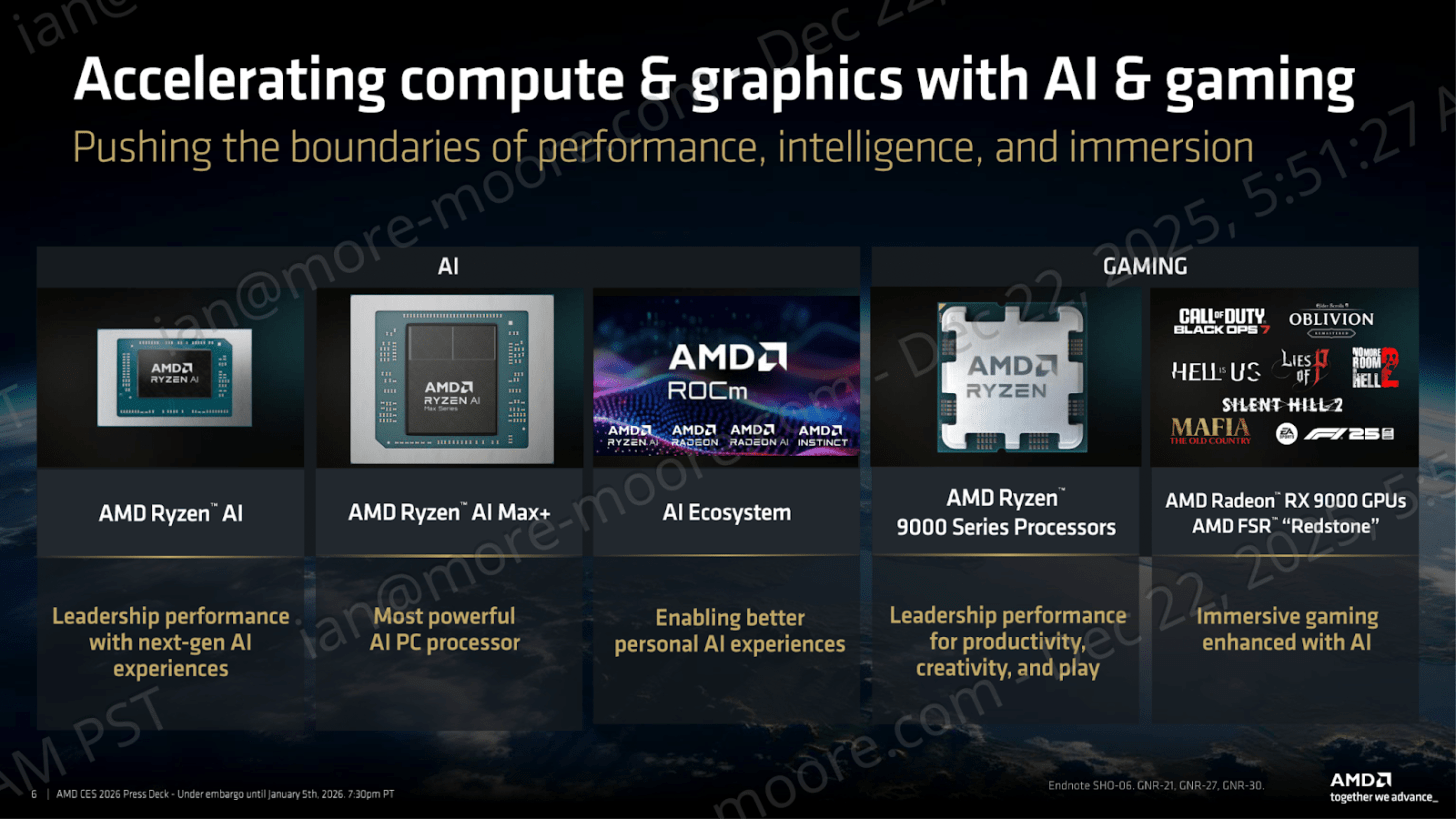

This matters because a mid-cycle refresh such as this one often aims to improve price to performance on a now-mature platform. When the architecture remains broadly the same, and the platform story is similar, the differentiators come down to competitiveness. All in all, the refresh will show whether AMD’s ‘AI PC’ positioning translates into the systems people can actually buy in volume. That being said, AMD’s CES 2026 announcements are split into the following segments:

- Mobile: AMD Ryzen AI 400 Series for Consumer, AI PRO 400 for Enterprise
- Halo Mobile/AI Development: AMD Ryzen AI Max+ Workstation
- Desktop: Ryzen 9000 series with more X3D coverage
- Graphics: Radeon RX 9000 series and FSR “Redstone”
- Software: ROCm
- Embedded: Ryzen AI Embedded P100 APUs for industrial and automotive

New AMD Ryzen AI 400 For Notebooks

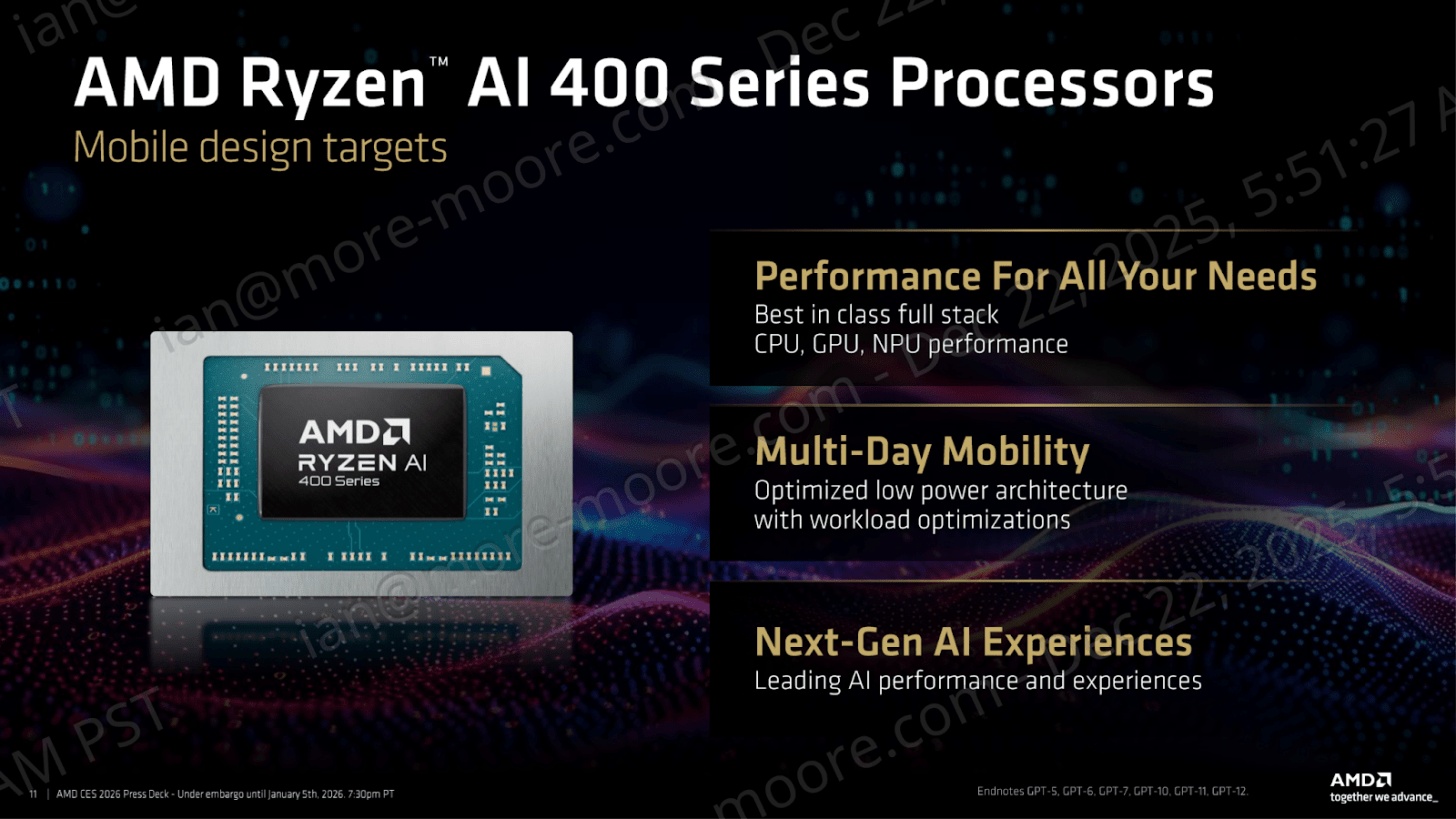

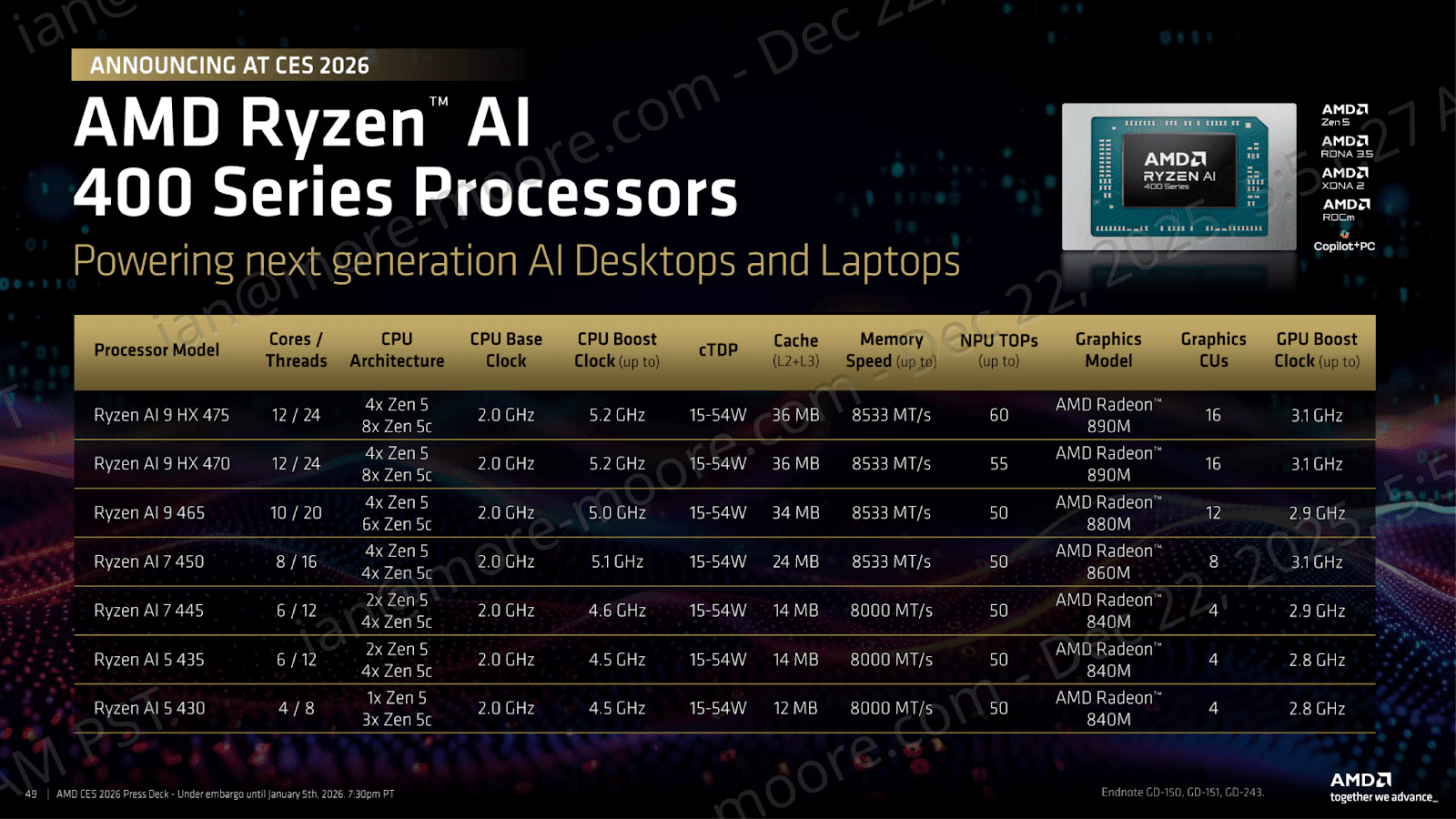

AMD’s Ryzen AI 400 series is perhaps the centerpiece (and bulk) of its CES 2026 client messaging, as it’s the part of the announced stack that is expected to translate to real OEM volume. AMD is pitching the updated Ryzen AI 400 series as the Copilot+ workhorse for laptops, which ties in with AMD’s message that the NPU, built on the XDNA2 architecture, is now the default AI PC engine rather than a premium add-on feature. The framing on availability also fits the theme of a mid-cycle refresh designed to keep the platform loud as the calendar advances another year, rather than a long-lead platform reset; the Ryzen AI 400 series is set to launch in Q1 2026, which is expected given that AMD is embracing the existing platform rather than introducing a new one.

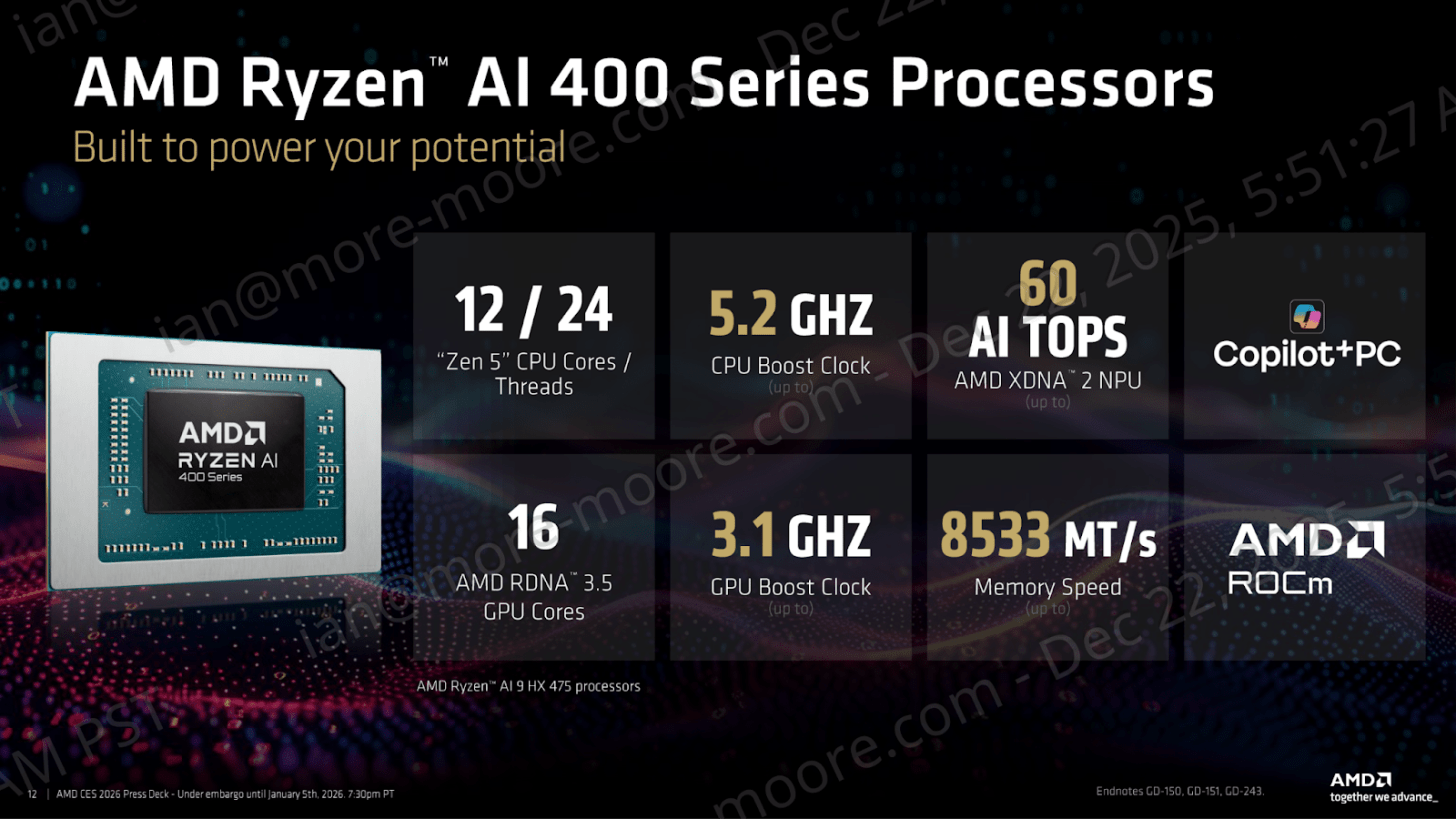

This, at best, makes the AMD Ryzen AI 400 a direct refresh of the existing 300 series, with improved TOPS performance, faster memory support, and likely better-binned silicon used throughout. A key takeaway among the improvements across the Ryzen AI 400 platform is a 9% boost to NPU performance at the top of the stack, from 55 TOPS to 60 TOPS. On top of this, AMD has raised the ceiling on memory performance with support for up to LPDDR5X-8533 on its mid-stack and higher-end SKUs, with LPDDR5X-8000 now being the platform’s baseline. The argument here is that as users (and software) run bigger models or want higher performance, memory bandwidth now matters even in the mid-tier. Overall though, AMD is using the same silicon as before, just with better binning, combining its Zen 5 CPUs with RDNA 3.5 graphics.

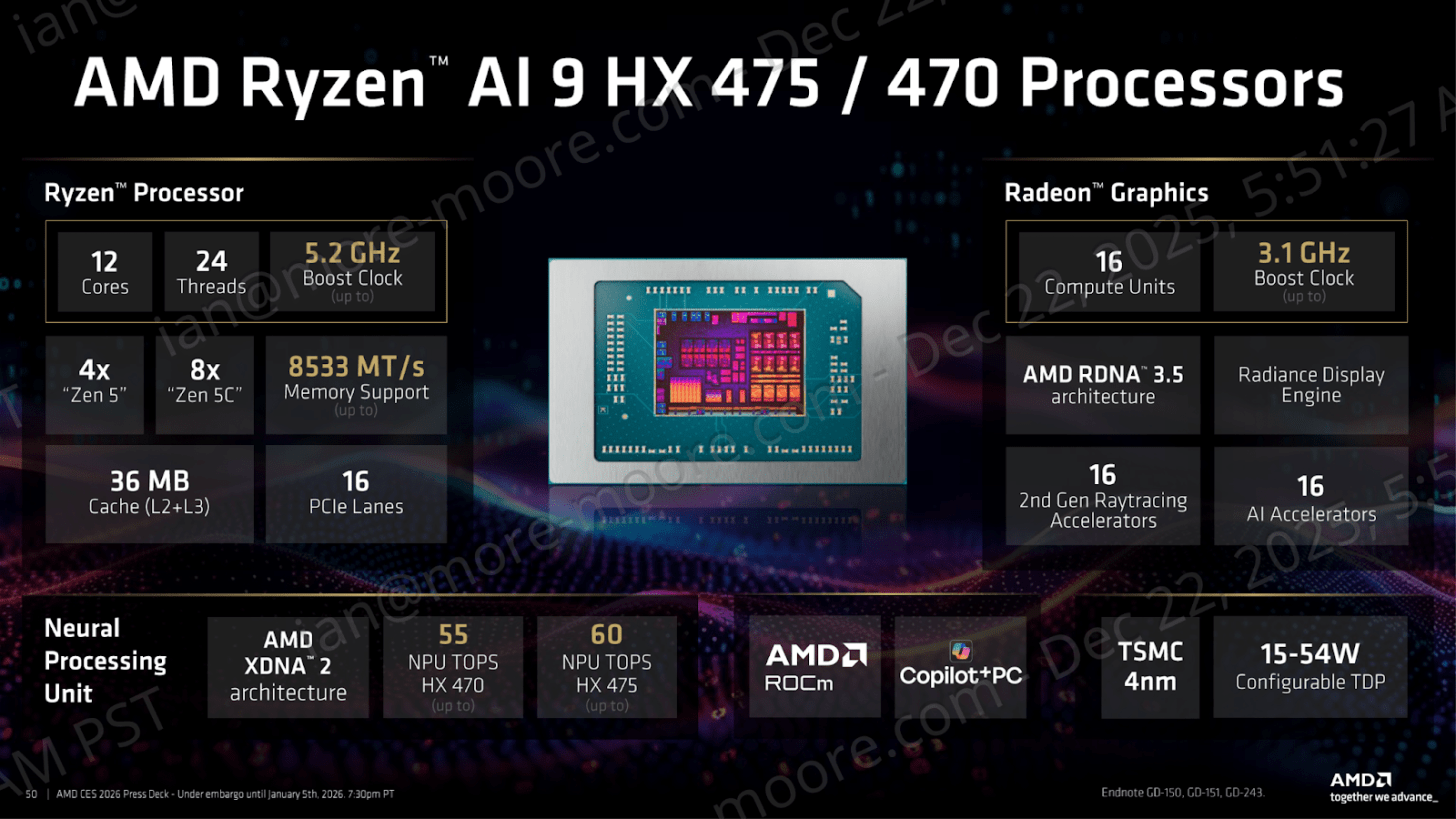

Acting as the halo is a pair of HX SKUs, the Ryzen AI 9 HX 470 and Ryzen AI 9 HX 475.

Comparing it with the previous generation:

- Ryzen AI 9 HX 375:
  - 12 cores (4 Zen 5 + 8 Zen 5c)
  - 2.0 GHz base, 5.1 GHz boost
  - 15-54 W cTDP, 28 W default
  - LPDDR5X-8000
  - 55 NPU TOPS
  - AMD Radeon 890M with 16 CUs at 2.9 GHz boost
- Ryzen AI 9 HX 475 *New*:
  - 12 cores (4 Zen 5 + 8 Zen 5c): no change
  - 2.0 GHz base, 5.2 GHz boost: +100 MHz on boost
  - 15-54 W cTDP, 28 W default: no change
  - LPDDR5X-8533: bin increase
  - 60 NPU TOPS: up 9%
  - AMD Radeon 890M with 16 CUs at 3.1 GHz boost: +200 MHz on boost

If you can get over the naming, the memory and NPU bumps are the main focus.
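To put the generational deltas in proportion, here is a minimal Python sketch that turns the quoted HX 375 and HX 475 figures into percentage changes. The dictionary layout and the `percent_change` helper are our own illustration; only the numbers come from the comparison above.

```python
# Figures copied from the HX 375 vs HX 475 comparison in this article.
# The key names and layout are illustrative, not anything AMD publishes.
hx_375 = {"cpu_boost_ghz": 5.1, "gpu_boost_ghz": 2.9, "memory_mtps": 8000, "npu_tops": 55}
hx_475 = {"cpu_boost_ghz": 5.2, "gpu_boost_ghz": 3.1, "memory_mtps": 8533, "npu_tops": 60}


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100.0


for key in hx_375:
    delta = percent_change(hx_375[key], hx_475[key])
    print(f"{key}: {hx_375[key]} -> {hx_475[key]} ({delta:+.1f}%)")
```

On these inputs the CPU boost clock moves by roughly 2%, while the GPU clock, memory speed, and NPU throughput each move by somewhere between 6% and 10%, which is why the latter are the headline items.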

Ryzen AI 400 Core Counts Deciphered

Full and Compact Cores Combined

As with previous generations, AMD is using a mix of its full-sized cores and its more compact cores to build out its SKUs, which can be confusing to consumers when looking at overall core counts. Both core types are functionally identical; however, the compact cores are designed with half of the L3 cache per core and a lower maximum frequency. In physical design, imposing a frequency limit can assist with density.

For the parts listed above, the Ryzen AI 5 430 is a 4C/8T part, with one full Zen 5 core and three Zen 5c cores. For the Ryzen AI 400 generation, the rule of thumb is consistent throughout the stack.

- 4C/8T SKU = 1 x Zen 5 + 3 x Zen 5c
- 6C/12T SKU = 2 x Zen 5 + 4 x Zen 5c
- 8C/16T SKU = 4 x Zen 5 + 4 x Zen 5c
- 12C/24T SKU = 4 x Zen 5 + 8 x Zen 5c
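The rule of thumb above can be written down as a small lookup table. This is only a sketch of the mapping as listed in this article; `CORE_SPLIT` and `describe_sku` are hypothetical names of our own, not an AMD tool or API.

```python
# Rule of thumb from the list above: total cores -> (Zen 5, Zen 5c).
# Names are illustrative; the split values are the ones quoted in the article.
CORE_SPLIT = {
    4: (1, 3),
    6: (2, 4),
    8: (4, 4),
    12: (4, 8),
}


def describe_sku(total_cores: int) -> str:
    """Return a breakdown such as '12C/24T = 4 x Zen 5 + 8 x Zen 5c'."""
    full, compact = CORE_SPLIT[total_cores]
    threads = total_cores * 2  # two threads per core, as in 4C/8T and 12C/24T
    return f"{total_cores}C/{threads}T = {full} x Zen 5 + {compact} x Zen 5c"


for cores in sorted(CORE_SPLIT):
    print(describe_sku(cores))
```

Looking a SKU up by its total core count makes it obvious that the number of full-sized cores stops growing at four, so the jump from 8 to 12 cores is made up entirely of compact cores.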

Across the Ryzen AI 400 series (excluding HX), AMD is introducing five SKUs that largely retain the characteristics of their previous-generation counterparts. The entry-level Ryzen AI 5 430, for example, remains a 4C/8T part with up to 4.5 GHz boost, effectively unchanged from last year’s Ryzen AI 5 340. Any differences higher up the stack are modest frequency bumps, reinforcing that this is a refresh and rebrand rather than a fundamentally new product lineup.

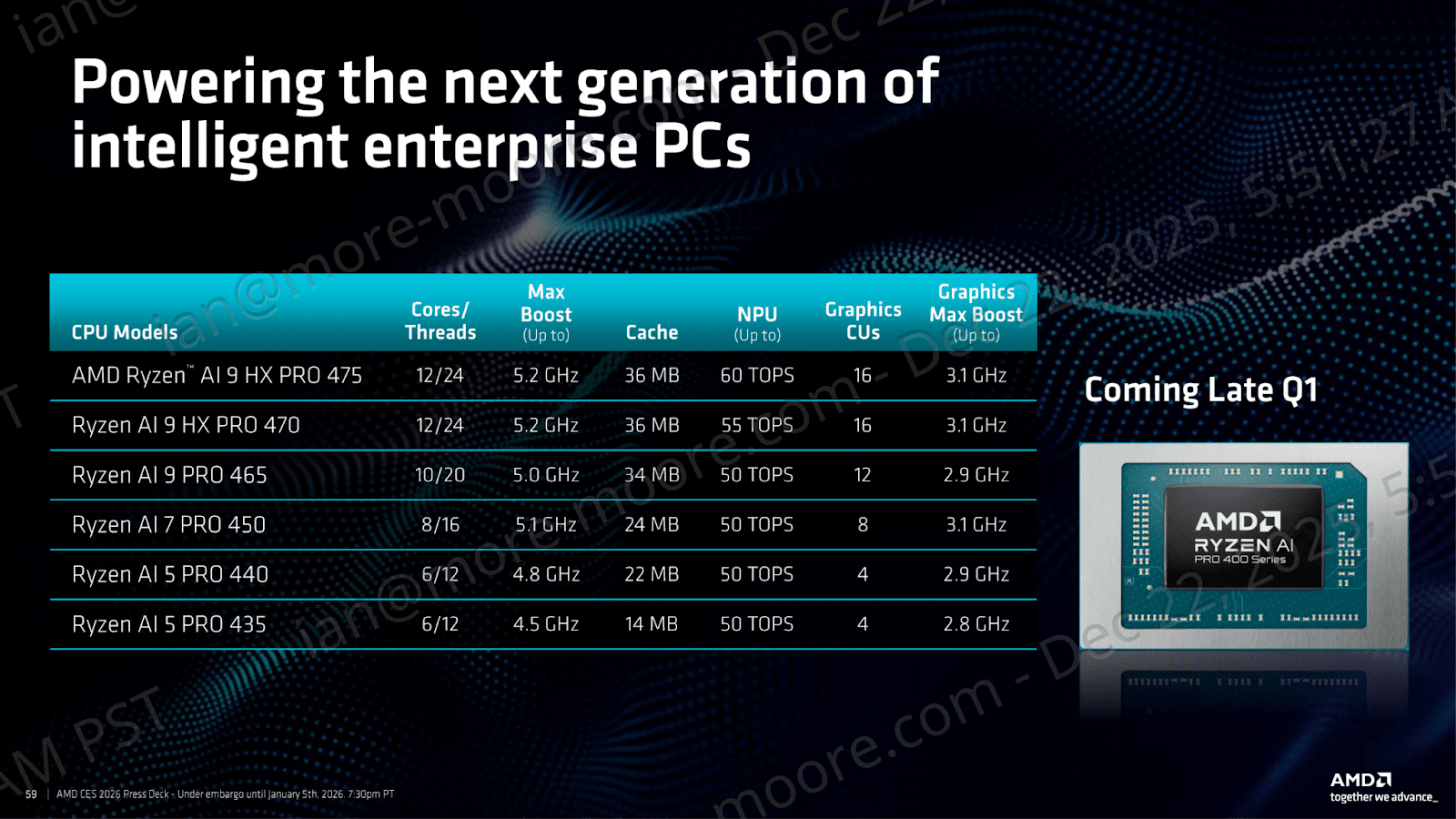

Alongside the consumer refresh, AMD has announced the Ryzen AI PRO 400 series aimed at SME and enterprise deployments. These SKUs layer in AMD PRO Technologies focused on fleet manageability and security, including AMD PRO Manageability and AMD PRO Security features such as Memory Guard. As with prior PRO platforms, many of these capabilities depend on OEM enablement, including support for Microsoft Pluton, which places the practical burden on system vendors rather than AMD alone. The result is a more capable commercial platform on paper, with real-world value determined by how fully OEMs choose to implement it.

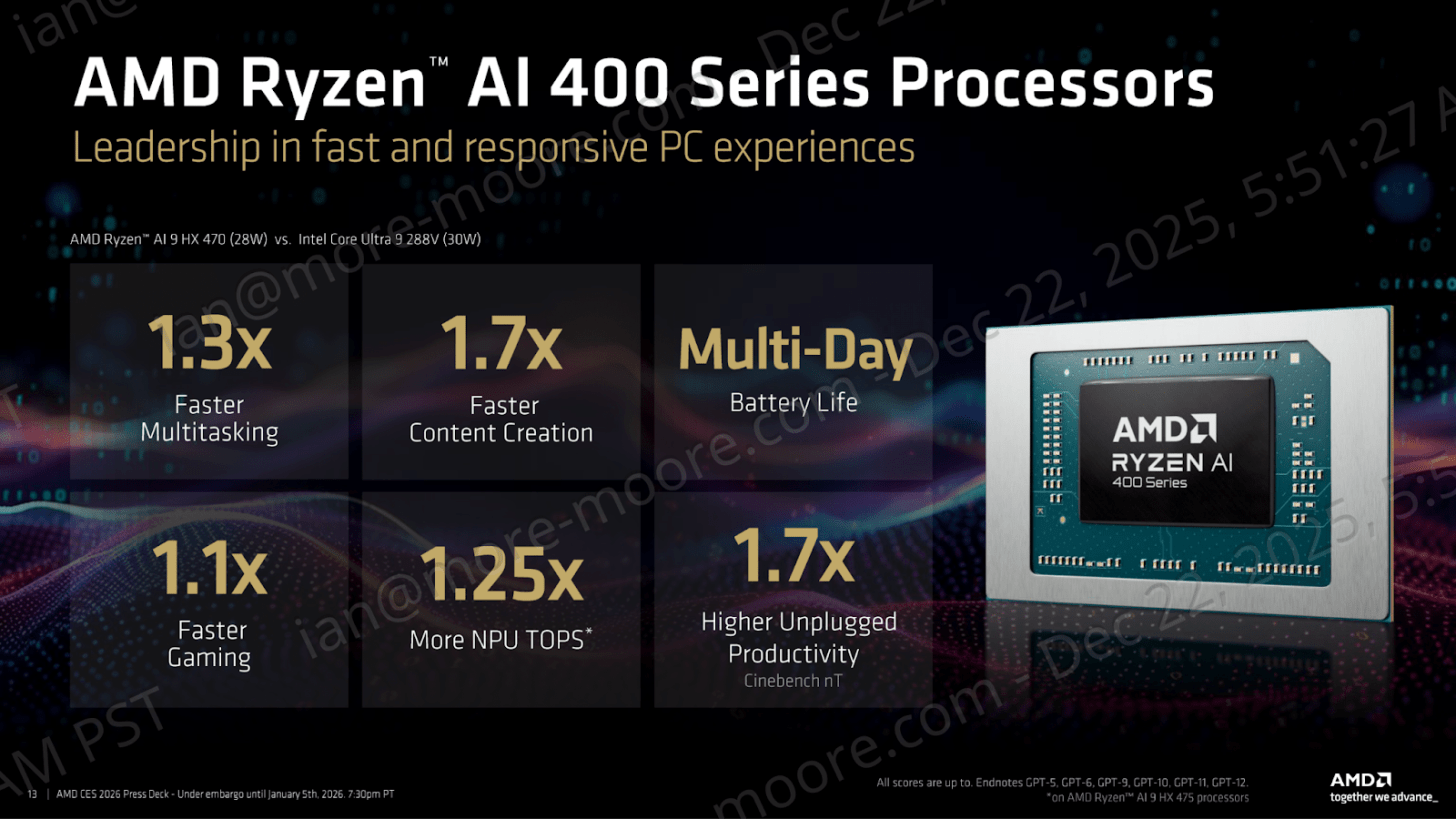

In AMD’s first-party benchmarks, the company compares its Ryzen AI 9 HX 470 against Intel’s Core Ultra 9 288V, highlighting advantages across multi-tasking, content creation, TOPS, and unplugged Cinebench performance. As with any internal comparison, these results are selective, but on paper they reinforce the same conclusion seen elsewhere in this launch: this is a straightforward generational refresh rather than a new stack driven by new silicon or a process shift.

It’s much the same parts for the same segments, but with a higher generational number placed in front. Only the top SKUs, such as the Ryzen AI 9 HX 475, give the launch any substance, with headline boosts to memory support and TOPS. In terms of power, all of the Ryzen AI 400 and HX series SKUs come with a configurable TDP of between 15 and 54 W, which puts the onus on OEMs and notebook manufacturers to match their chassis to the power envelope each SKU is capable of.

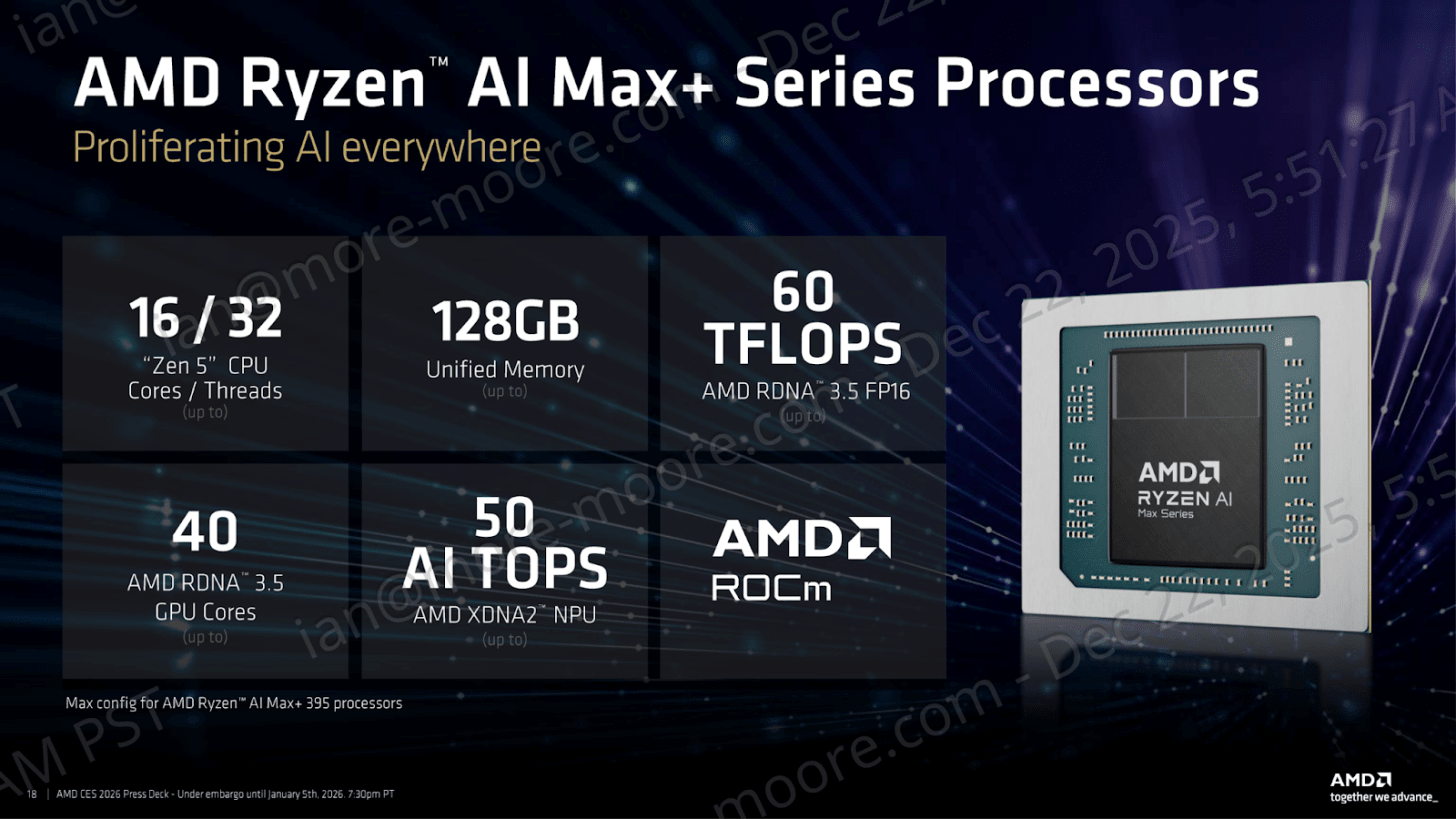

AMD Ryzen AI Max+ Series: A Mini Workstation

If the Ryzen AI 400 series is AMD’s volume play designed for Copilot+ laptops, then Ryzen AI Max+ is the halo tier. AMD frames the product line for local AI development and high-end creation workloads, with the overall pitch leaning on discrete-level graphics performance and workstation-class positioning. Of course, all of these buzzwords read as a mobile creator’s dream in terms of marketing message, but AMD is delivering Max+ through an APU-like unified design. In the context of AMD’s broader story, Max+ is the product series that lets AMD step beyond the typical ‘ticking the AI checklist’ narrative and pitch something more aspirational. It’s also worth noting that a ‘big GPU version of the APU’ has been requested by gamers for over a decade.

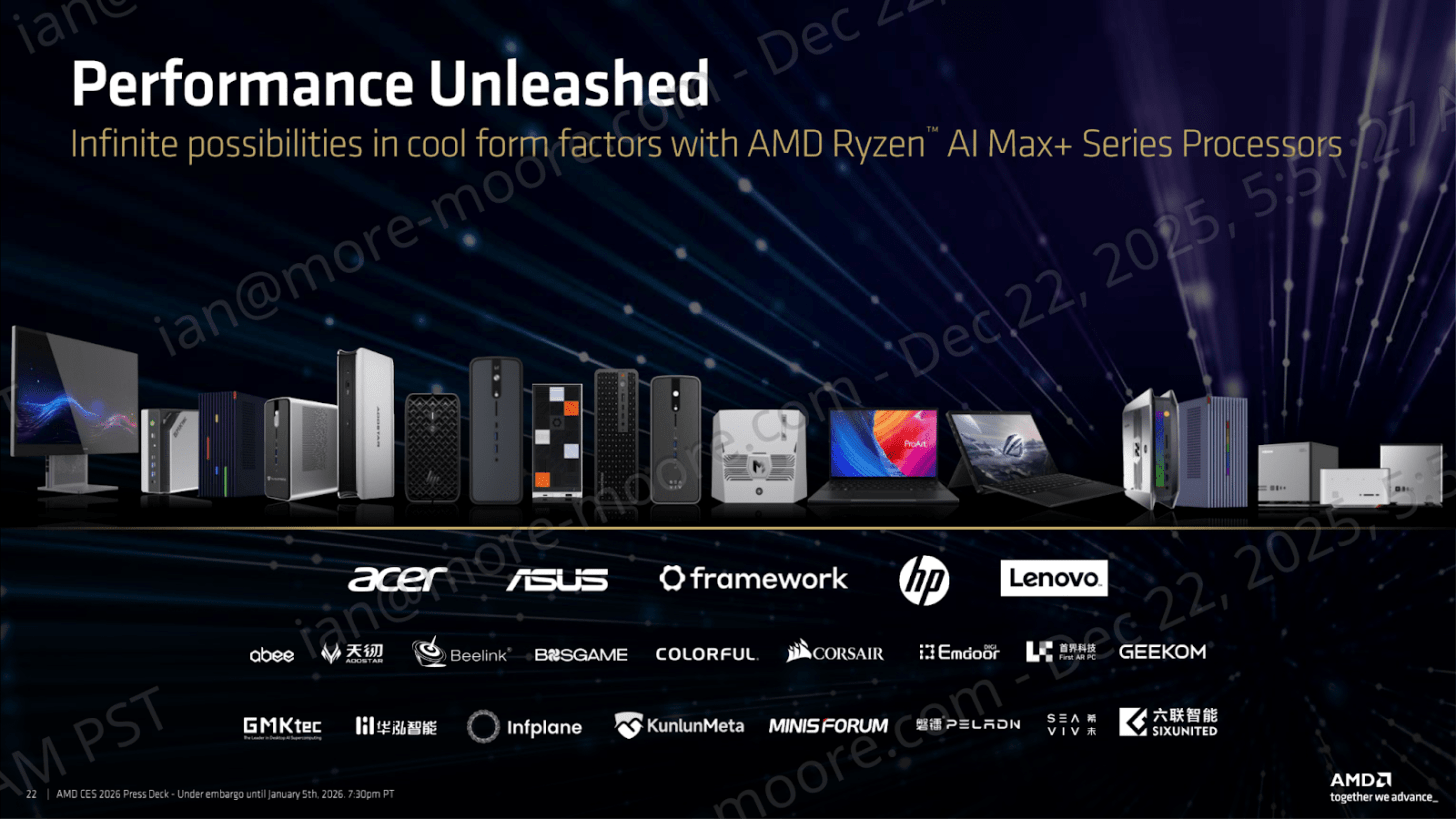

The thing is, last year AMD was hesitant with its Ryzen AI Max+ parts: we saw an HP notebook, a repurposed HP mini-PC design, and a new ASUS ROG Flow Z13. All the OEMs seemed reluctant to pick this part up, given the new swim lane for AMD. Fast forward through Q4 2025 and into 2026, and every OEM seems to be chomping at the bit to get as much supply as possible.

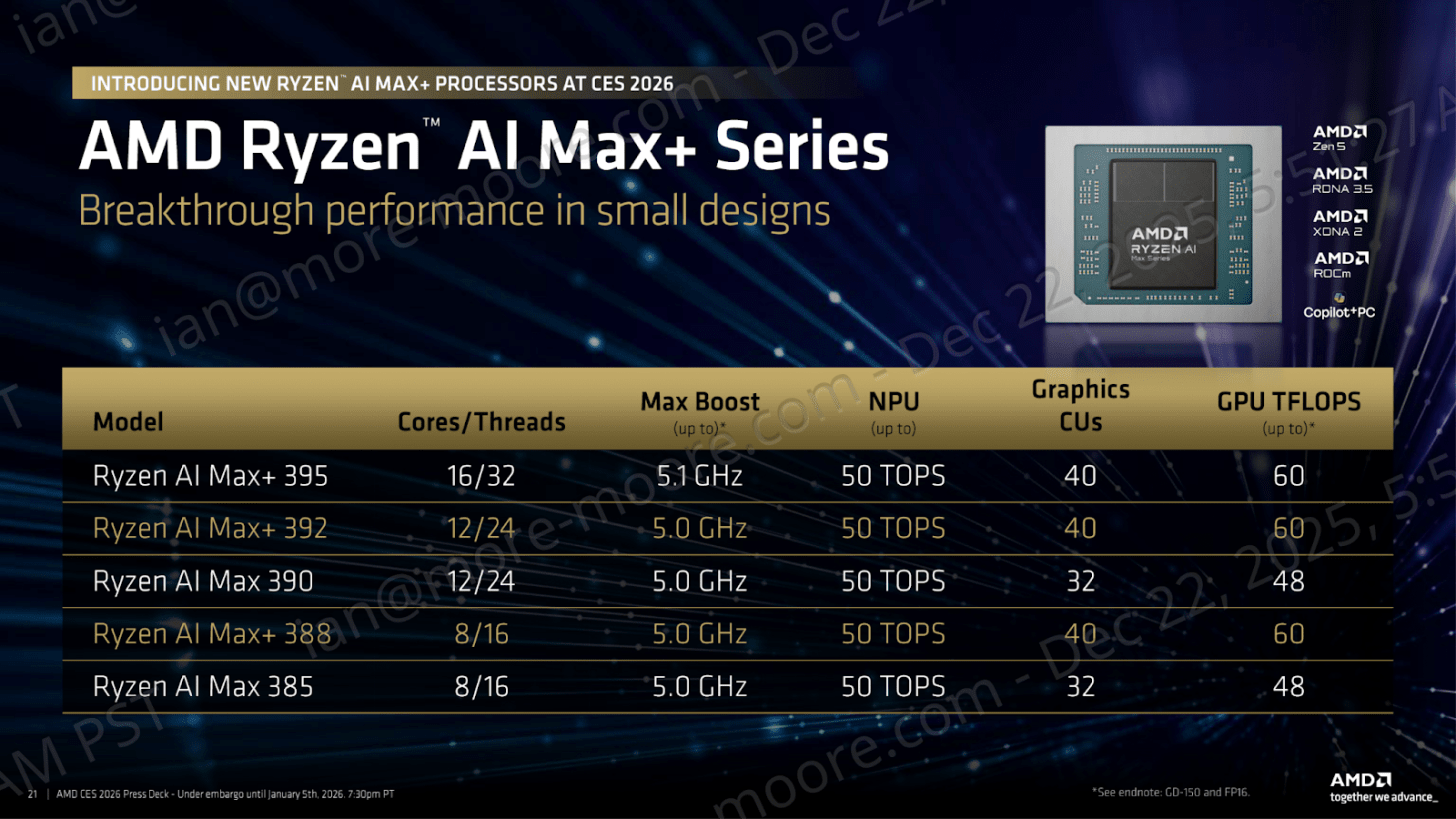

In 2025 three parts were announced: the 395, 390, and 385, each available with 32 GB, 64 GB, or 128 GB of memory. In reality, mostly only the top SKU was afforded the full range, whereas the lower parts often had only 64 or 32 GB. In order to fill out the line, the Max+ gets a couple of new members: the 392 and 388. The plus in Max+ means it gets the full-sized GPU, rather than a binned-down version. In this case AMD is binning the CPU cores, not the GPU.

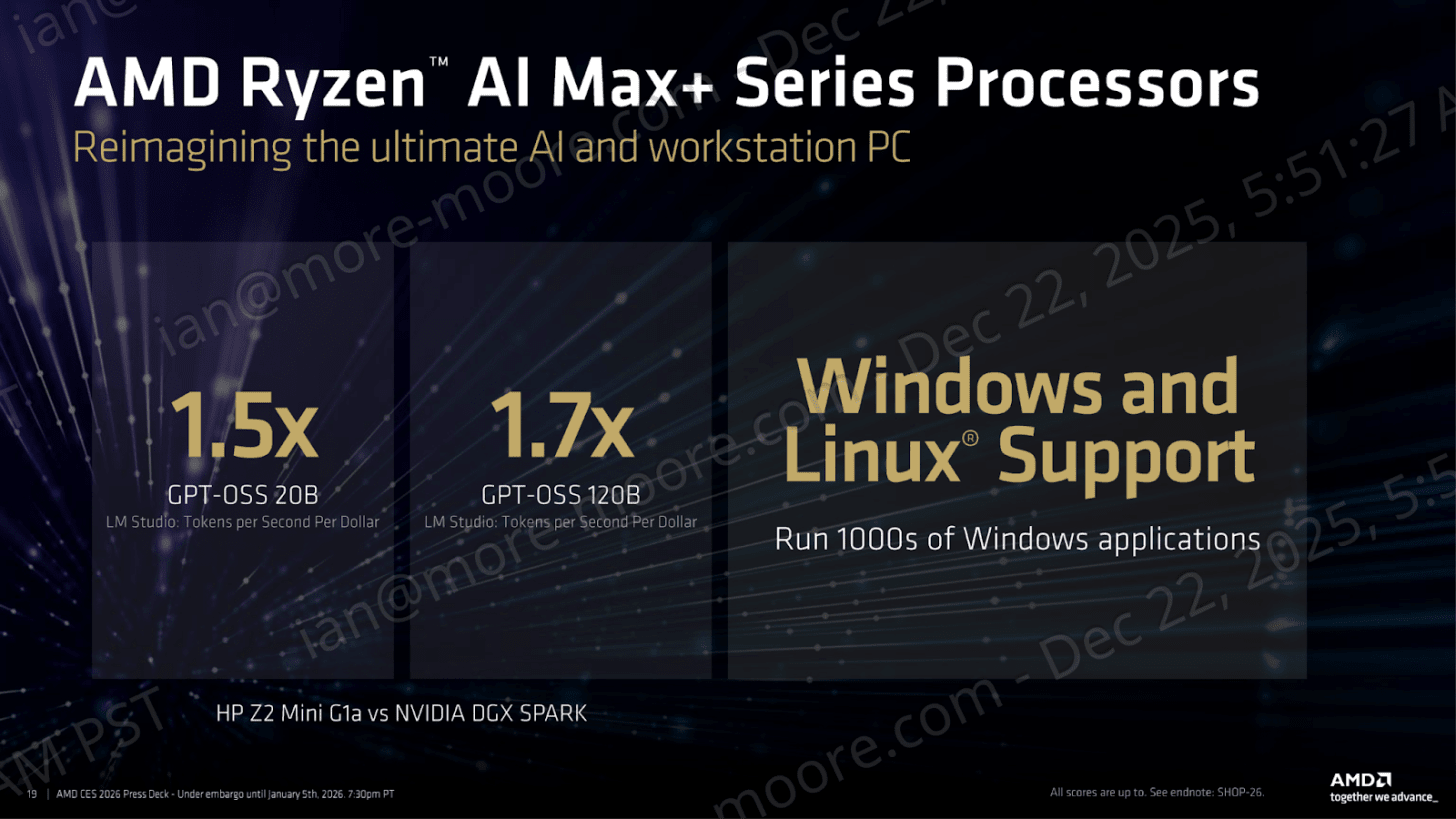

When it comes to in-house marketing figures, claims should always be taken with a pinch of salt rather than at face value. What’s interesting here is that AMD is deliberately trying to drag the Ryzen AI Max+ out of the run-of-the-mill APU discussion, and aims it squarely at NVIDIA’s miniature AI workstation, the DGX Spark. As you can imagine, AMD is opting for a more value-friendly metric to measure performance against the more capable DGX Spark by using a “tokens per dollar” conversion rather than trading blows in pure AI inference horsepower. This is smart from a marketing perspective, especially given that Ryzen AI Max+ can’t compete in a direct performance shoot-out. AMD uses two specific AI workloads for the comparison, GPT-OSS at 20B and 120B, in which it claims 1.5x and 1.7x, respectively, in tokens per dollar.
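To show why a value metric can flip the ranking, here is a minimal sketch of a tokens-per-dollar comparison. The throughput and price inputs below are placeholders we made up purely for illustration; they are not AMD’s or NVIDIA’s figures, and AMD has not told us exactly how it normalizes its own numbers.

```python
def tokens_per_dollar(tokens_per_second: float, system_price_usd: float) -> float:
    """Inference throughput normalized by system price."""
    return tokens_per_second / system_price_usd


# Hypothetical inputs only: a cheaper, slower box versus a pricier, faster one.
cheaper_slower = tokens_per_dollar(tokens_per_second=40.0, system_price_usd=2000.0)
pricier_faster = tokens_per_dollar(tokens_per_second=50.0, system_price_usd=4000.0)

value_ratio = cheaper_slower / pricier_faster
print(f"Slower system delivers {value_ratio:.2f}x the tokens per dollar")
```

The takeaway is that the slower system can still come out ahead on this metric as long as its price advantage is larger than its performance deficit, which is exactly the framing a vendor picks when it cannot win the raw throughput comparison.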

In 2026, AMD looks to show multiple configurations and designs in which OEMs will be implementing AI Max+. These range from notebooks to mini PCs, and even an all-in-one PC design encompassing a screen; this shows SKU versatility, but it all comes down to volume and how vendors want to utilize the SKUs in their designs. Whether vendors ship the big unified memory variants more widely than they did in launch systems remains to be seen, but AMD is clearly signaling that Max+ is meant to be a platform tier that can be scaled across form factors, and it expects the mini workstation and mini-PC categories to be among the primary homes for the new big iGPU plus unified memory design proposition.

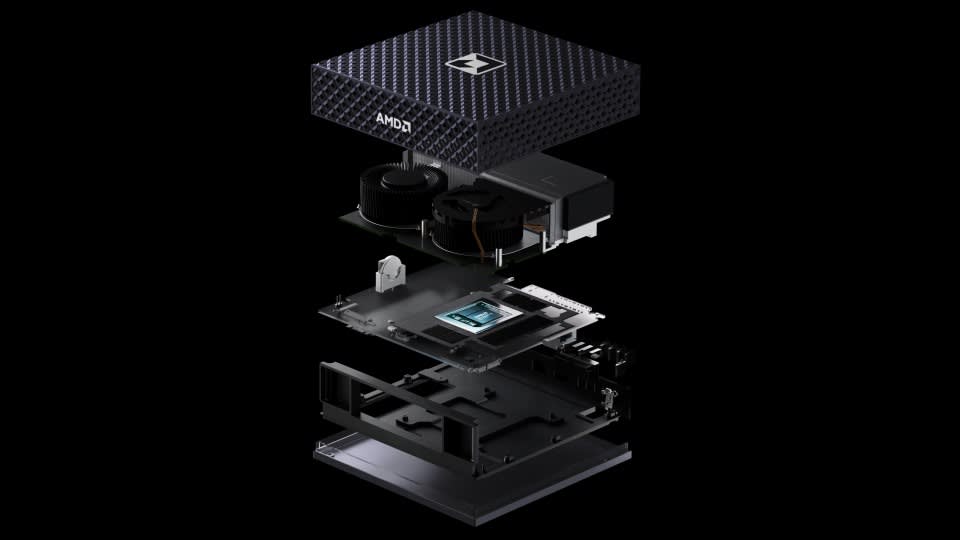

One of the other announcements as part of this is a new ‘Ryzen AI’ workstation. Given the popularity of hardware like the Mac Mini/Mac Studio and NVIDIA’s DGX Spark, rather than showcase a wide array of options from its partners, AMD is building a reference design as a Spark competitor, on Ryzen AI Max+, called the Ryzen AI Halo Developer Platform.

AMD hasn’t provided any details aside from the two images, and we’re expecting AMD CEO Lisa Su to show it off at the CES keynote today (it may already have happened if you’re reading this as we publish). In order to compete with Spark, we’re expecting it to have some form of enhanced networking beyond a 10 GbE port, so perhaps something Pensando based. AMD will no doubt highlight that being x86 based is a big plus for AI developers. The only other note we got on this in advance is ‘expected in Q2 2026’.

The thing is, one of the key differentiators AMD wants you to focus on with Ryzen AI Max+ is memory capacity, not TOPS. AMD is leaning into the idea that Max+ can enable a class of local AI inference and creation workflows by pairing a large integrated graphics configuration with up to 128 GB of unified memory. Opting for a unified coherent memory structure allows the CPU and GPU to share one memory pool concurrently, and to access the same data structures without requiring software to manually keep copies synced. Coherent memory offers a practical upside for the integrated GPU, as it can use far more capacity than a typical discrete card’s VRAM; it can draw directly from system memory without hard boundaries or the same duplication overhead. There are tradeoffs, as a shared memory pool is still bound by DRAM bandwidth and latency, and doesn’t automatically solve memory bandwidth constraints. After all, unified coherent memory is still DRAM.
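A back-of-the-envelope calculation shows why capacity is the headline. The sketch below estimates the size of model weights alone, taking the “20B” and “120B” labels as nominal parameter counts and assuming a few common bit widths; those bit widths are our assumption, and the estimate ignores the KV cache, activations, and whatever memory the OS and CPU side of the system need.

```python
UNIFIED_MEMORY_GB = 128  # maximum capacity quoted for Ryzen AI Max+


def weight_footprint_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Nominal parameter counts and assumed precisions, for illustration only.
for params in (20, 120):
    for bits in (16, 8, 4):
        size = weight_footprint_gb(params, bits)
        verdict = "fits" if size < UNIFIED_MEMORY_GB else "does not fit"
        print(f"{params}B @ {bits}-bit: ~{size:.0f} GB of weights, {verdict} in {UNIFIED_MEMORY_GB} GB")
```

Under these assumptions a 120B-class model only becomes comfortable at lower precisions, and even then it needs far more memory than the VRAM on typical consumer discrete cards, which is the gap the unified pool is meant to fill.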

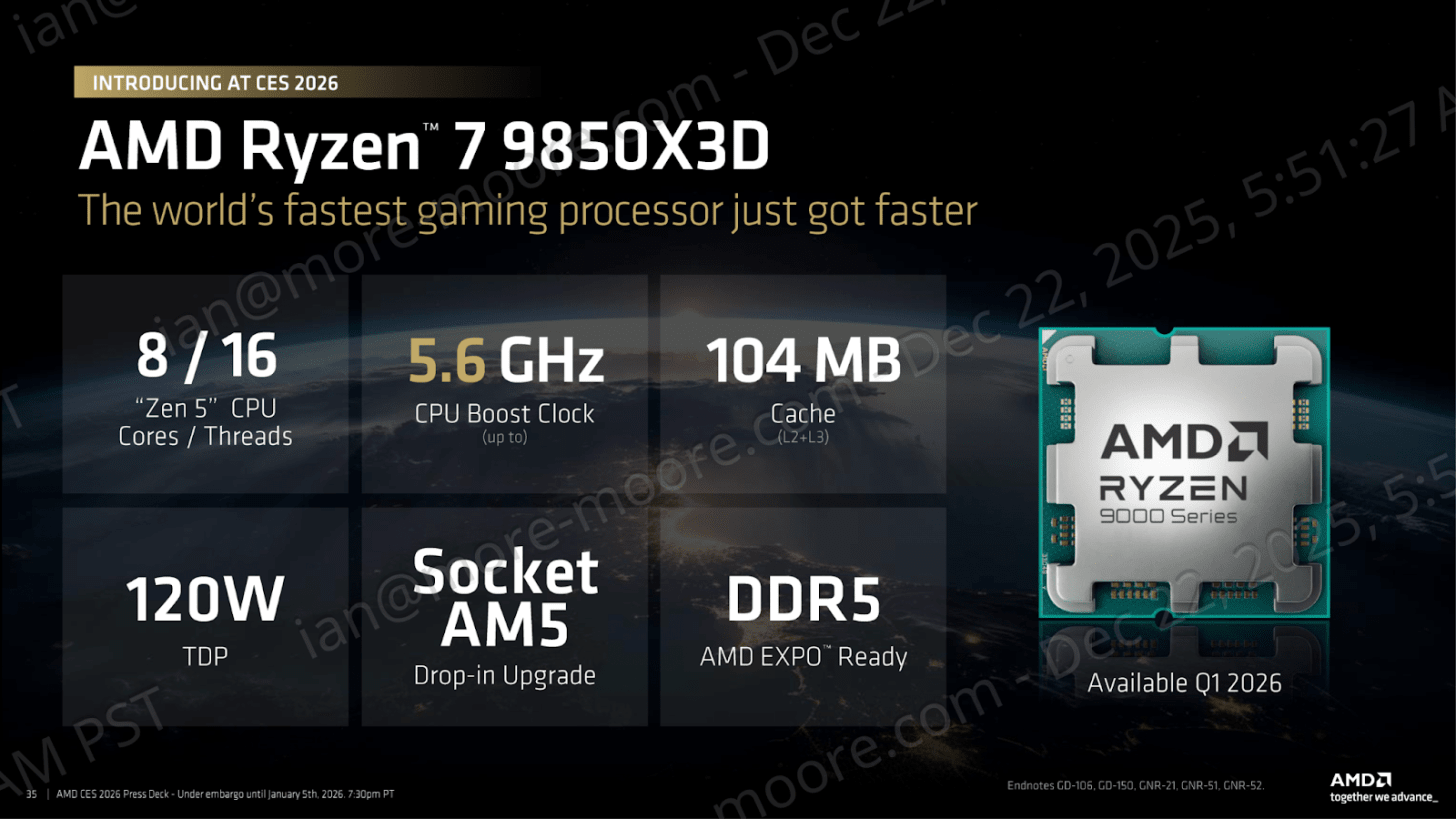

AMD Ryzen 7 9850X3D: A Single New CPU

For consumers expecting anything groundbreaking in terms of development regarding its desktop line-up, AMD announces just one new addition to the AM5 stack: the 3D V-Cache packaged Ryzen 7 9850X3D. This is AMD’s quietest area of its mid-cycle refresh for CES 2026, but it offers users more of a reminder that X3D is its gaming halo rather than shifting expectations in the market. If you zoom out from all of the marketing blitz AMD has provided, it’s a classic shelf management move rather than platform progression; we didn’t expect AMD to have much to offer in terms of desktop given the Zen 6 update is still a way away, and it shows in this smaller announcement.

The AMD Ryzen 7 9850X3D uses better binning to give a 400 MHz increase in boost clock over AMD’s other 8C/16T X3D Zen 5 chip, the Ryzen 7 9800X3D. It’s more than likely also repurposing silicon from higher core count parts, such as the Ryzen 9 9900X3D, that couldn’t be validated to specification and have had cores disabled.

Despite this, the Ryzen 7 9850X3D offers up to 5.6 GHz of boost, which puts it on par in terms of speed with the higher core count SKUs, such as the already mentioned Ryzen 9 9900X3D and the flagship Ryzen 9 9950X3D. It does, however, have the same 96 MB of L3 cache (64 MB of 3D V-Cache packaged in addition to the default 32 MB), with 1 MB of L2 cache per core and drop-in support for AMD’s current AM5 platform.

From my standpoint, this is AMD leaning on its lowest risk lever: X3D for gaming. X3D remains one of the few CPU-side implementations that can move the needle in gaming more than other components outside of a direct discrete graphics upgrade. With a quiet cycle on desktop, which is likely to carry on until at least the summer, AMD is simply trying to keep its AM5 platform relevant in the current ecosystem, even with memory prices the way they are. Even if it was time for a platform update, you could imagine most of the discussion would be about memory pricing anyway.

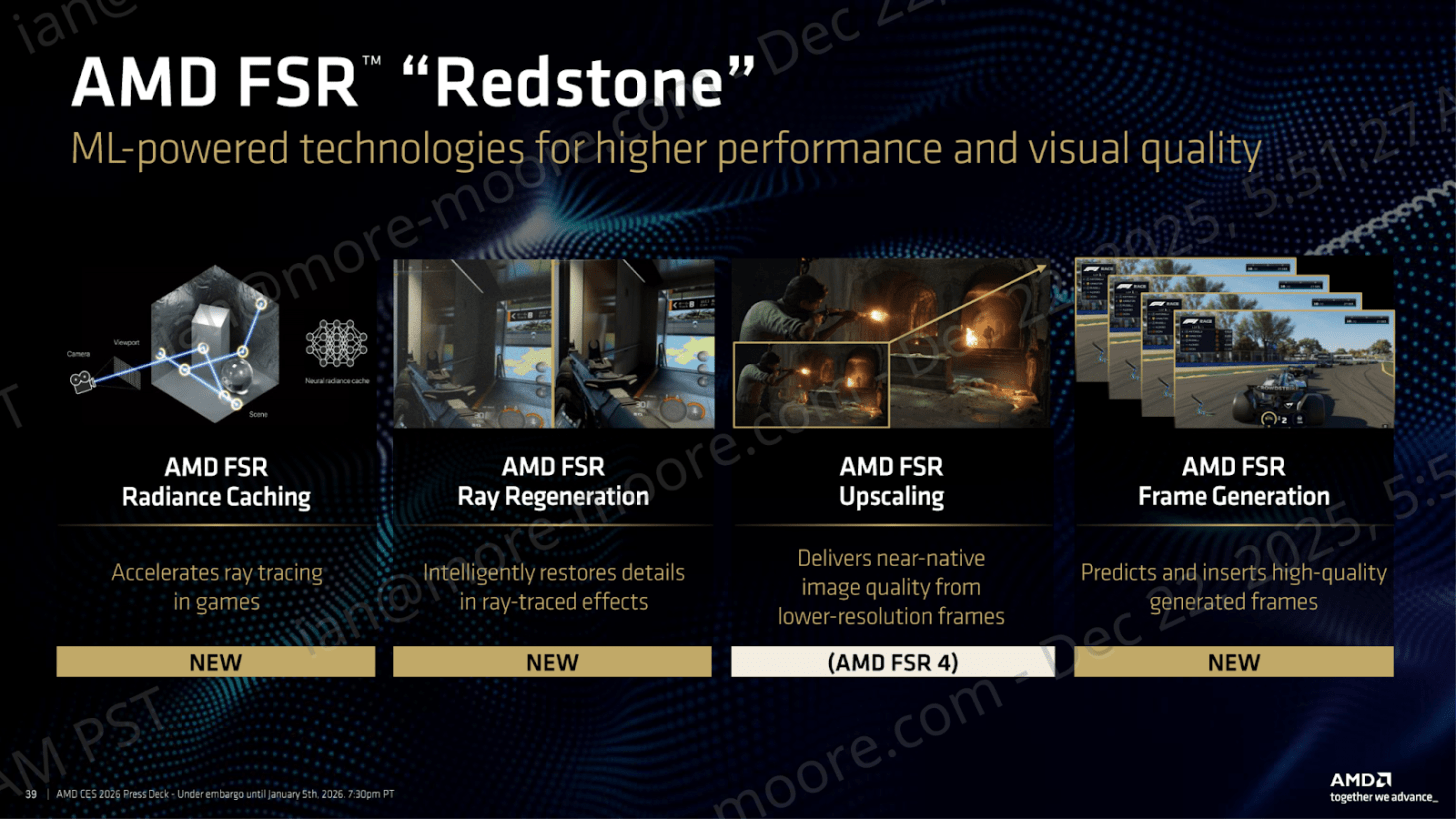

AMD Graphics: Framing for FSR “Redstone”

Moving on from desktop, we have FSR ‘Redstone’. What FSR Redstone actually brings is an attempt to make the GPU story feel like an evolution of its graphics platform without requiring any new SKUs to debut. It’s all about software optimization for gaming rather than launching new silicon. AMD isn’t selling new GPUs, but rather its ongoing optimizations, squeezing out performance for its current-gen Radeon RX 9000 series of discrete graphics options. AMD’s primary marketing focus for Redstone at CES 2026 is the Radeon RX 9070 XT graphics card, with this particular model acting as AMD’s vehicle to deliver and showcase the improvements brought to the table by Redstone.

Rather than announcing anything new in terms of graphics, AMD is elaborating in more detail on what FSR Redstone is and what it brings to the table. FSR Redstone isn’t one specific feature, but a combination of features intended to provide a more holistic solution for gamers. Redstone is based upon four primary pillars. The first two are FSR Radiance Caching, which accelerates ray tracing performance, and FSR Ray Regeneration, which reconstructs the detail in scenes with ray-traced effects. The other two are FSR Upscaling, explicitly branded as FSR 4 and designed to deliver near-native image quality from lower resolution frames, and FSR Frame Generation, which predicts interpolated frames between the rendered ones to increase perceived smoothness and raise the delivered frame rate.

Viewed at a platform level, Redstone is AMD formalizing a hybrid rendering pipeline where raster performance is no longer the only element that matters. The important point to consider with FSR Redstone is that it leans towards reconstruction as the default path to more performance with upgraded visuals; AMD is effectively admitting here that the route to playable 4K games with ray tracing is not just brute force raster, but a stack of algorithms. This is being driven by ML-assisted frame generation, trading native rendering for better output. It concedes what the industry already knows: that playable 4K with ray tracing is increasingly a reconstruction problem, not a raw shader throughput problem. FSR Redstone’s job is to keep the Radeon RX 9000 series competitive through software coverage and developer adoption during a quiet GPU cycle.

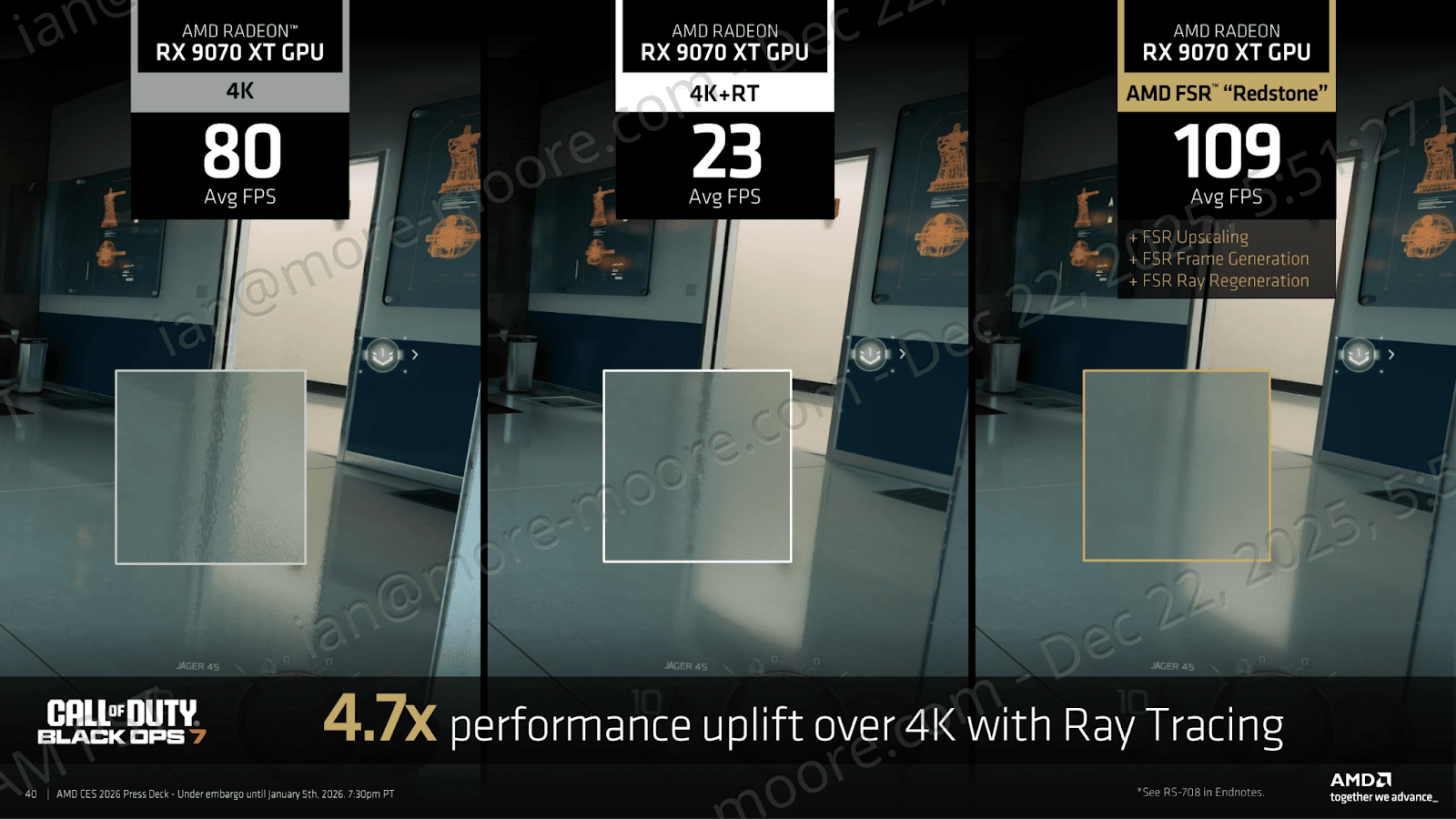

AMD’s pitch for FSR Redstone is presented in a slide using Call of Duty: Black Ops 7 and the Radeon RX 9070 XT to showcase the performance gains on offer. AMD shows 80 frames per second at 4K without ray tracing, collapsing to a sluggish 23 frames per second once ray tracing is enabled. With FSR Redstone enabled, AMD claims an average of 109 fps; by layering upscaling, frame generation, and ray regeneration, that works out to a claimed uplift of 4.7x at 4K with ray tracing enabled, which looks solid enough.
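The slide’s arithmetic is easy to sanity check. The sketch below uses only the three frame rates AMD quotes; the variable names are ours, and the derived ratios are our own reading of the slide rather than numbers AMD publishes beyond the 4.7x headline.

```python
# Frame rates quoted on AMD's slide (4K, Radeon RX 9070 XT).
fps_raster_only = 80    # ray tracing off, no Redstone
fps_rt_native = 23      # ray tracing on, no Redstone
fps_rt_redstone = 109   # ray tracing on, Redstone features layered

uplift_vs_rt_native = fps_rt_redstone / fps_rt_native   # the headline multiplier
rt_penalty = 1 - fps_rt_native / fps_raster_only         # share of fps lost to ray tracing
vs_raster_only = fps_rt_redstone / fps_raster_only       # Redstone + RT versus plain raster

print(f"Uplift with ray tracing on: {uplift_vs_rt_native:.2f}x")
print(f"Ray tracing penalty at native: {rt_penalty:.0%}")
print(f"Redstone with RT versus raster only: {vs_raster_only:.2f}x")
```

The first ratio lands a little above 4.7, so the headline claim is consistent with the quoted frame rates. Keep in mind that the 109 fps figure includes generated frames, so it describes delivered frames rather than natively rendered ones.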

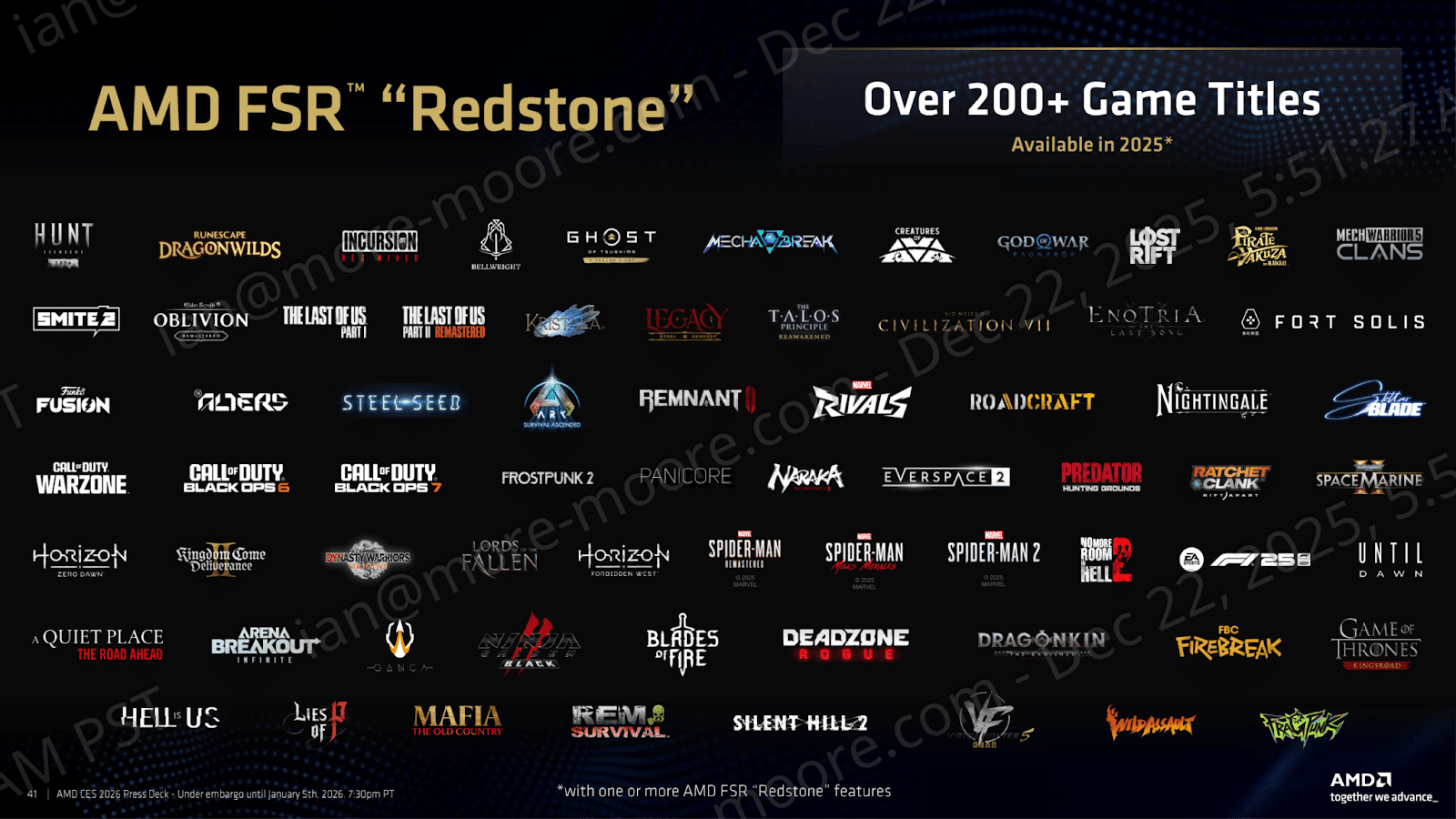

AMD does include a slide showing what looks like a simple market brag on adoption of FSR Redstone, because nothing kills a software-led graphics pitch quicker than low availability across the gaming ecosystem. The small print here, however, is very telling: the 200+ game titles pitch gets a little deflating considering that many of those titles benefit from ‘one or more’ of the Redstone features, as opposed to the entire stack of improvements and enhancements. That distinction is important, because it means real-world outcomes are going to vary game to game, depending on whether a developer ships only upscaling or properly integrates the ray reconstruction parts of the stack.

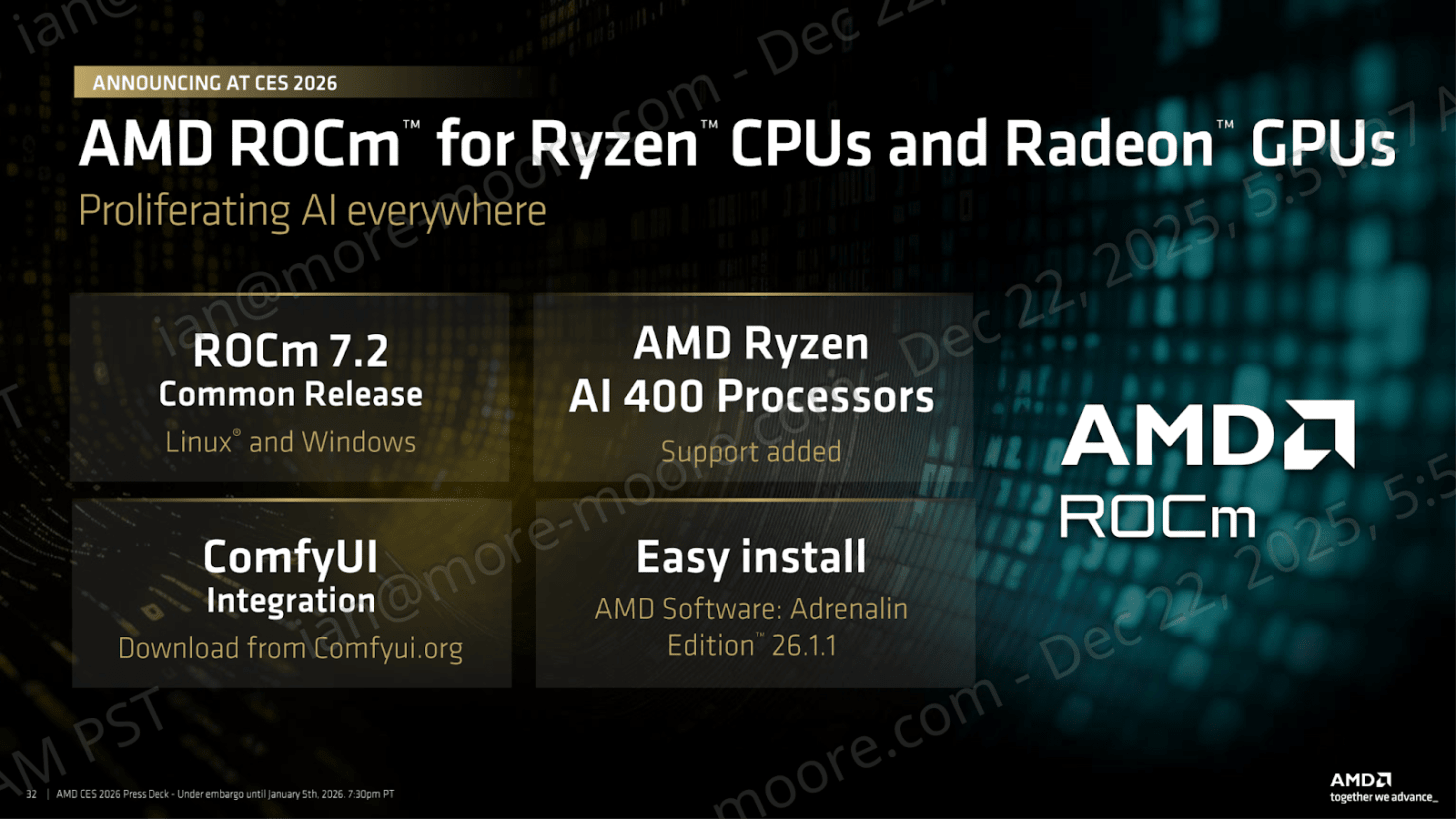

Software: ROCm

If FSR Redstone is AMD’s gaming proof point that its software can move the needle in a very thin and quiet GPU news cycle, then ROCm is the same play from the same playbook, this time pointed at local AI. AMD is framing ROCm more directly than it has previously, with ROCm 7.2 being positioned as a single, common release across both Windows and Linux. The messaging leans especially on support for the refreshed Ryzen AI 400 processors, which lends ROCm a sense of newness within AMD’s mid-cycle CES 2026 announcements. That makes sense given that ROCm has earned a lot of column inches and gained ground within the industry in recent times. AMD is tying ROCm directly to the new mobile stack and leans into Windows as a first-class target as opposed to a side quest.

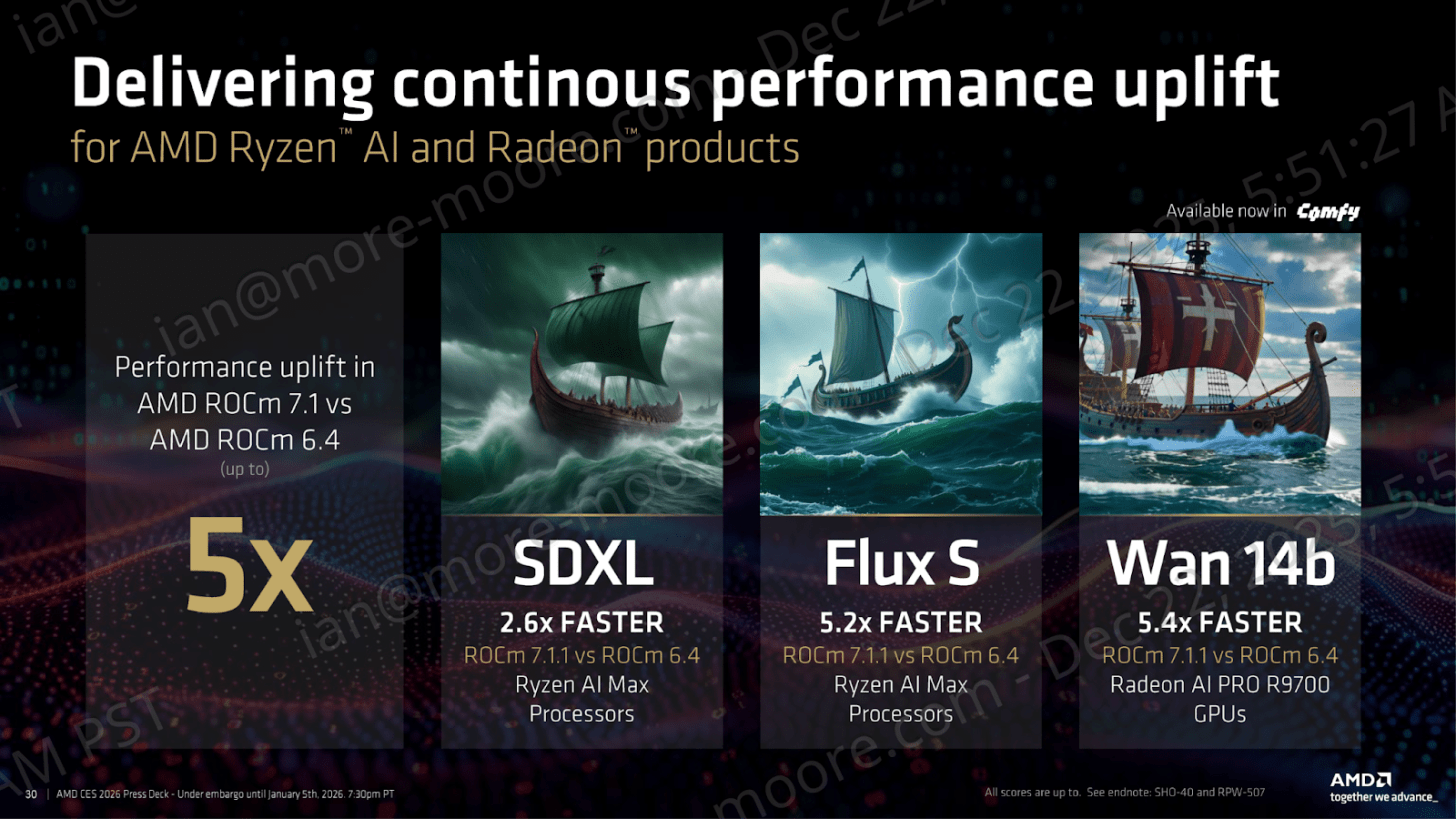

AMD backs this positioning with a ‘continuous performance uplift’ flavoring, as you would expect from a software-led narrative. Here AMD frames ROCm as showing repeatable gains across both the Ryzen and Radeon product stacks rather than as a static toolkit. AMD claims up to a 5x uplift in ROCm 7.1 compared to ROCm 6.4, illustrating the point with examples such as SDXL and Flux S. In these cases, AMD highlights multi-fold gains on both Ryzen AI Max processors and Radeon AI Pro GPUs, reinforcing its message that ROCm is an actively improving software stack where performance advances arrive through iteration rather than new silicon.

One important part to pull out here is that AMD is deliberately using popular and recognizable generative AI workflows as proof points to get its messaging across. AMD even flags that ComfyUI integration is available now and is downloadable from the source, with which AMD looks to be dragging ROCm out of the abstract and into tools people can actually touch. It’s worth noting that AMD’s performance improvement metrics are ‘up to’ figures, not definitive gains, and are tied to specific hardware and ROCm versions, which makes them sensitive to configuration. It’s clear where AMD is investing, but that doesn’t guarantee that every model or workflow will see the same jump in performance. This somewhat devalues the exact multiplier, and the claim is better read as an implication that ROCm is a living performance layer that AMD continues to improve across both its integrated and discrete AI-capable hardware.

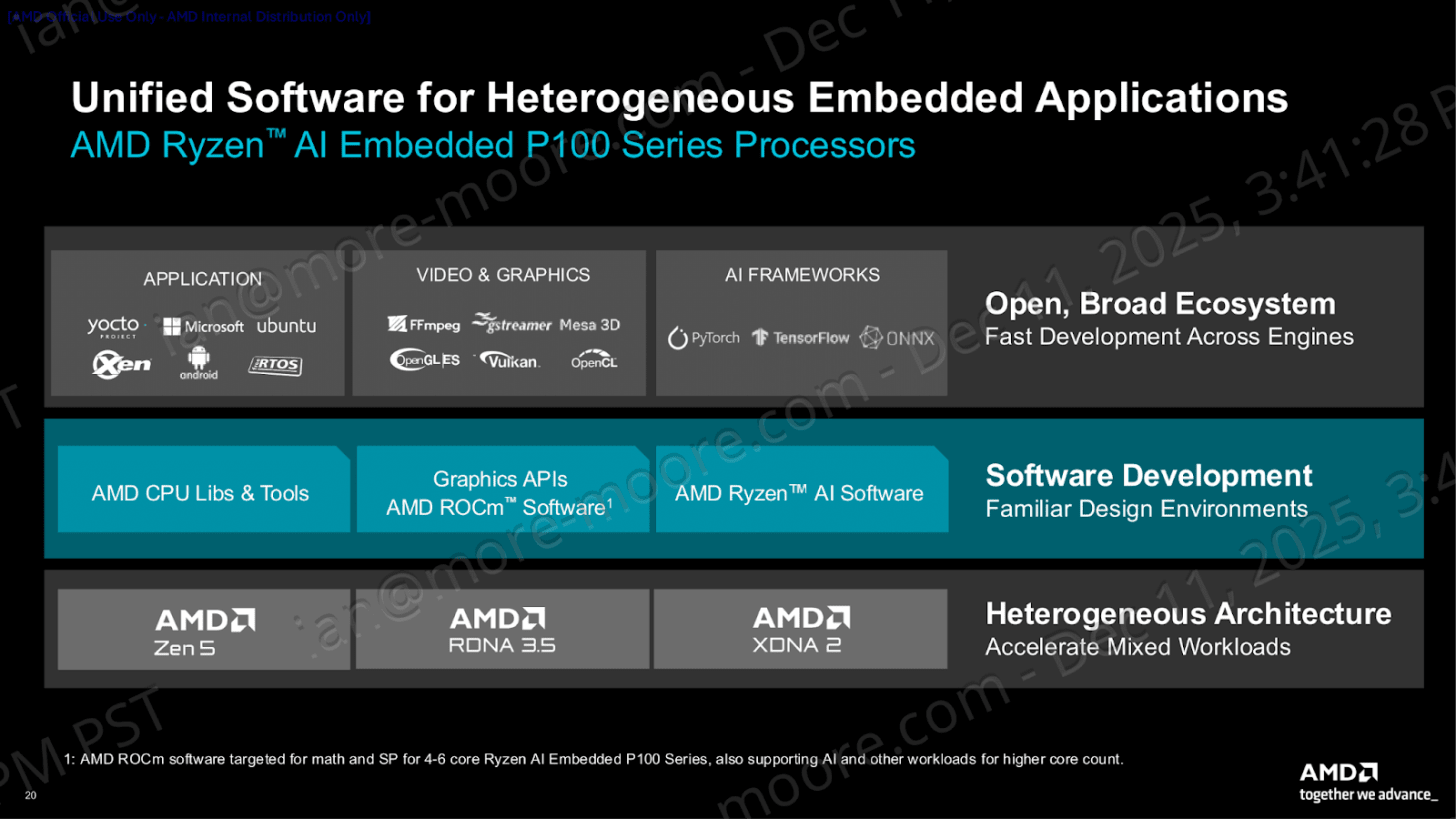

AMD Ryzen AI Embedded P100 Series

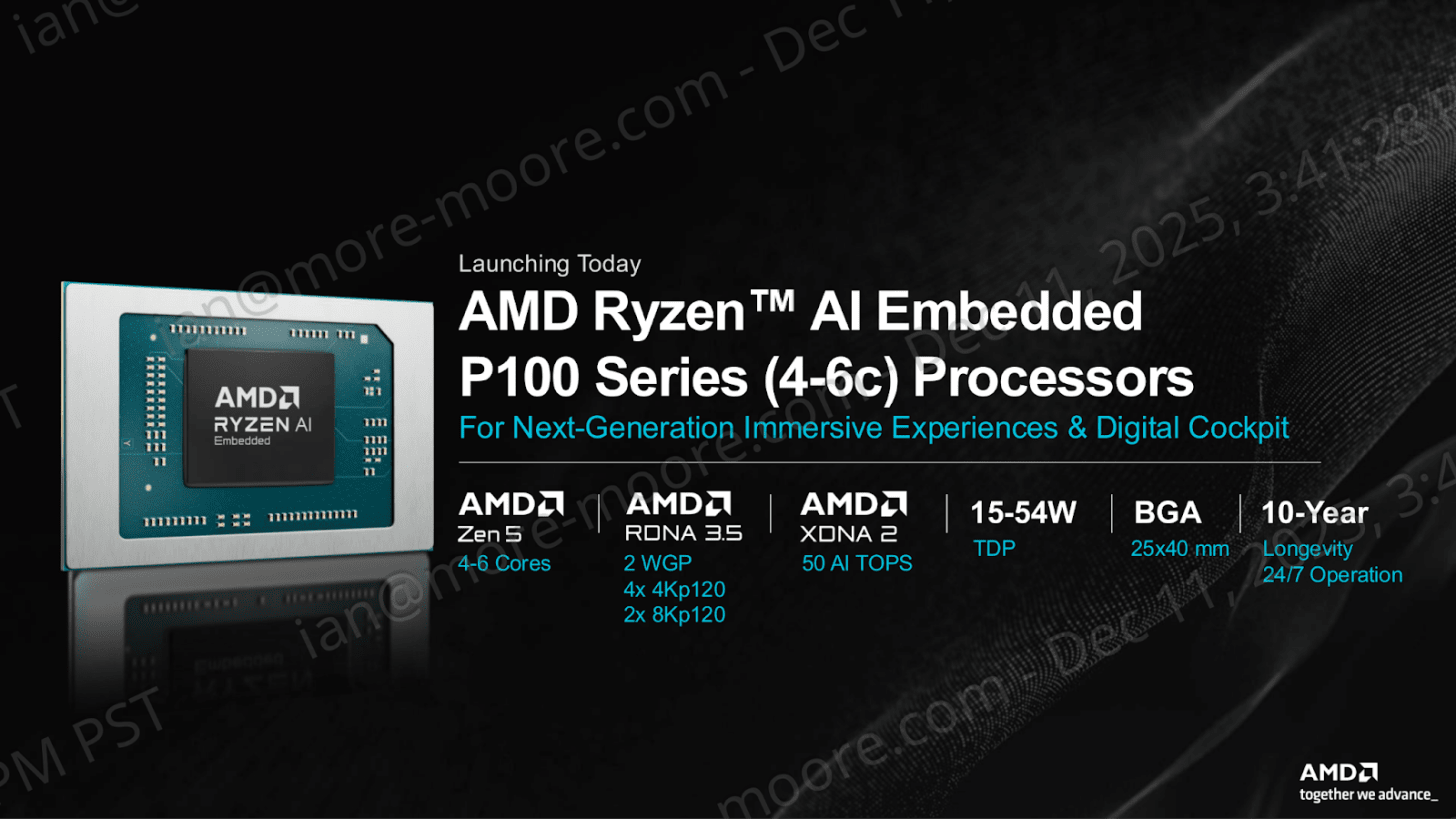

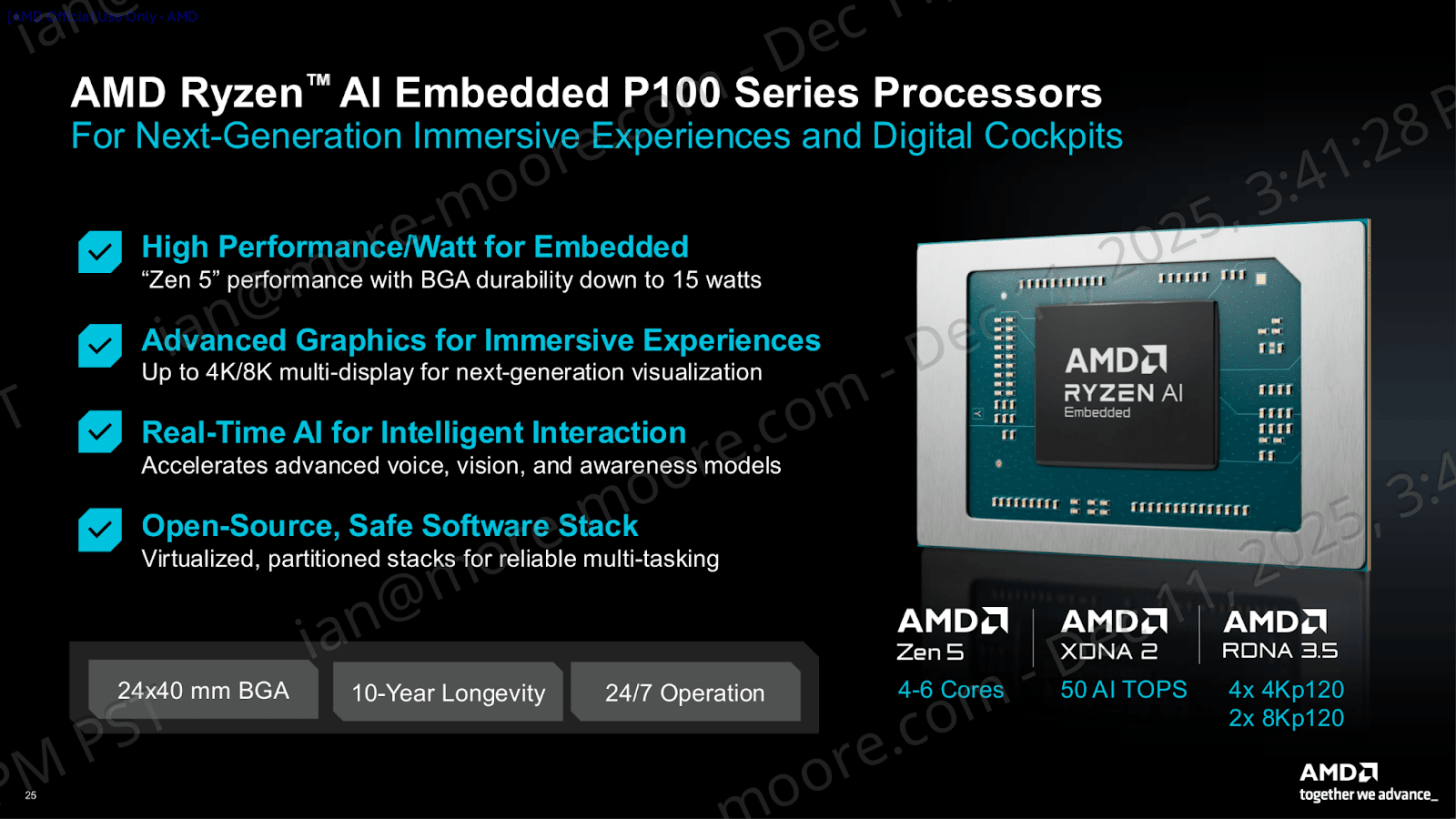

AMD is also carving out its Ryzen AI Embedded tier within its portfolio with the P100 series at CES 2026, which is positioned as a full APU solution similar to what we’ve seen before. The P100 series offers a small list of SKUs with a holistic solution for embedded products, with a CPU that can run the control plane, a GPU running the visuals, and an NPU handling all of the AI bits while ticking all the boxes that actually matter at the edge, and in automotive.

The P100 family is framed as the next generation for immersive experiences and digital cockpits, with SKUs ranging from four to six cores, with the six cores featuring up to 50 AI TOPS and scaling from 15 W to 54 W TDPs on a 25 x 40 mm BGA package designed for 24/7 operation and 10-year longevity.

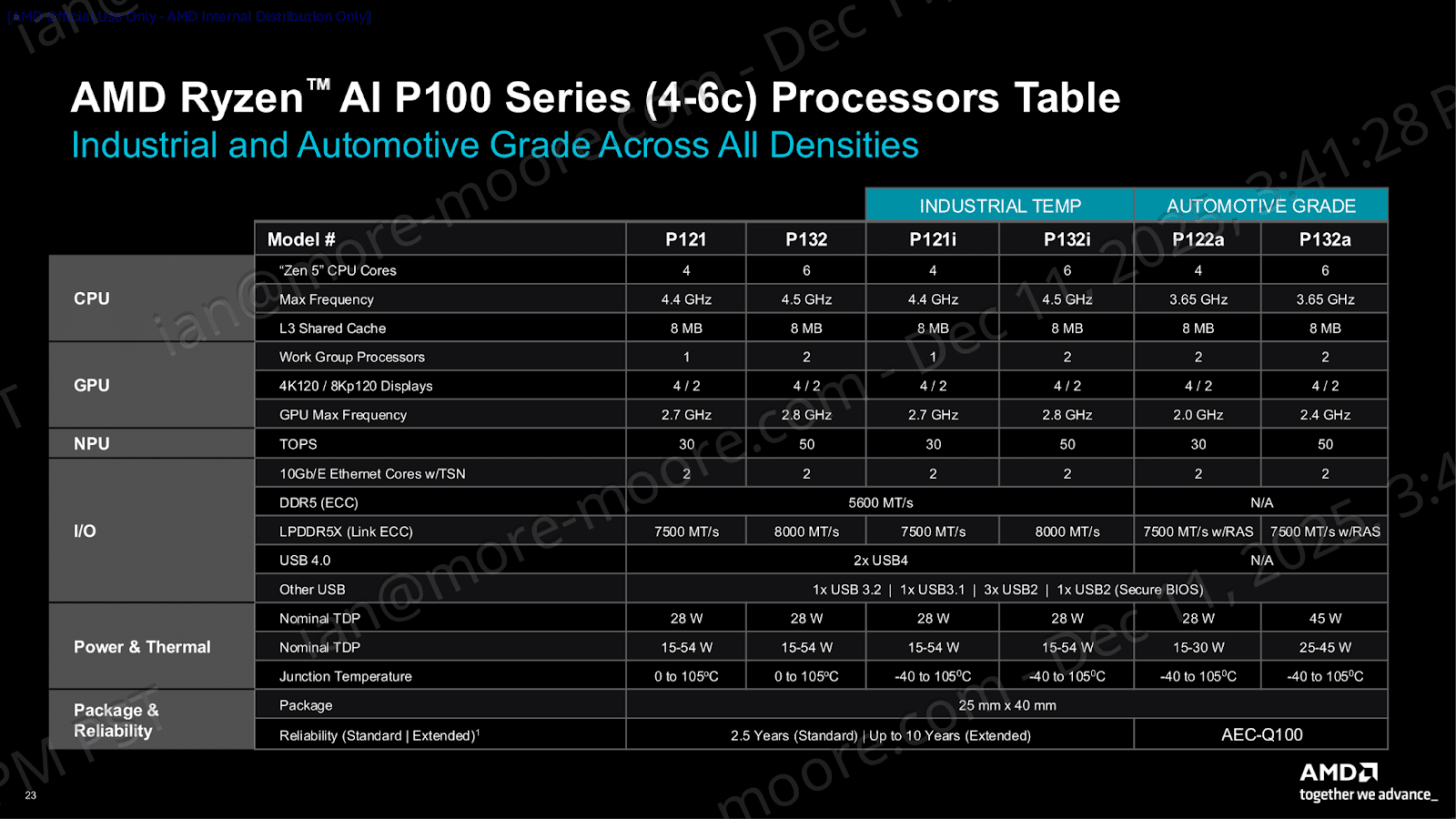

AMD is explicitly launching the Ryzen AI Embedded P100 series (4 to 6 cores) as an embedded APU tier of chips, with its marketing being clear that it’s a consolidated CPU/GPU/NPU platform rather than segmented discrete components. The SKU list is small but defined, and the SKUs themselves are segmented into different markets. This includes the P121 (4c) and P132 (6c), designed for industrial temp, the P121i (4c) and P132i (6c), designed for industrial extended temp, and two SKUs for automotive, the P122a (4c) and P132a (6c).

The 4-core parts themselves top out at 30 TOPS on the NPU, while the 6-core parts scale higher with up to 50 TOPS on offer. The graphics configuration follows the same segmentation logic: the 4-core industrial parts sit at 1 WGP, while the 6-core parts and both automotive SKUs step up to 2 WGP. On the I/O side, AMD rolls out dual 10GbE networking.
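The segmentation described above can be captured in a small table. This is a sketch built only from the SKU names and rules quoted in this article; the `P100Sku` class is our own illustration, and we are assuming that the ‘4-core industrial’ wording covers both the standard and extended temperature grades.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class P100Sku:
    name: str
    cores: int
    segment: str  # "industrial", "industrial-extended", or "automotive"

    @property
    def max_npu_tops(self) -> int:
        # 4-core parts top out at 30 TOPS; 6-core parts reach up to 50 TOPS.
        return 30 if self.cores == 4 else 50

    @property
    def gpu_wgp(self) -> int:
        # Assumption: "4-core industrial" includes the extended temp grade.
        if self.cores == 4 and self.segment != "automotive":
            return 1
        return 2


P100_SKUS = [
    P100Sku("P121", 4, "industrial"),
    P100Sku("P132", 6, "industrial"),
    P100Sku("P121i", 4, "industrial-extended"),
    P100Sku("P132i", 6, "industrial-extended"),
    P100Sku("P122a", 4, "automotive"),
    P100Sku("P132a", 6, "automotive"),
]

for sku in P100_SKUS:
    print(f"{sku.name:<6} {sku.cores}c  {sku.segment:<20} "
          f"up to {sku.max_npu_tops} TOPS  {sku.gpu_wgp} WGP")
```

Laid out this way, the one outlier is the 4-core automotive part, which keeps the lower NPU ceiling but gets the larger graphics configuration.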

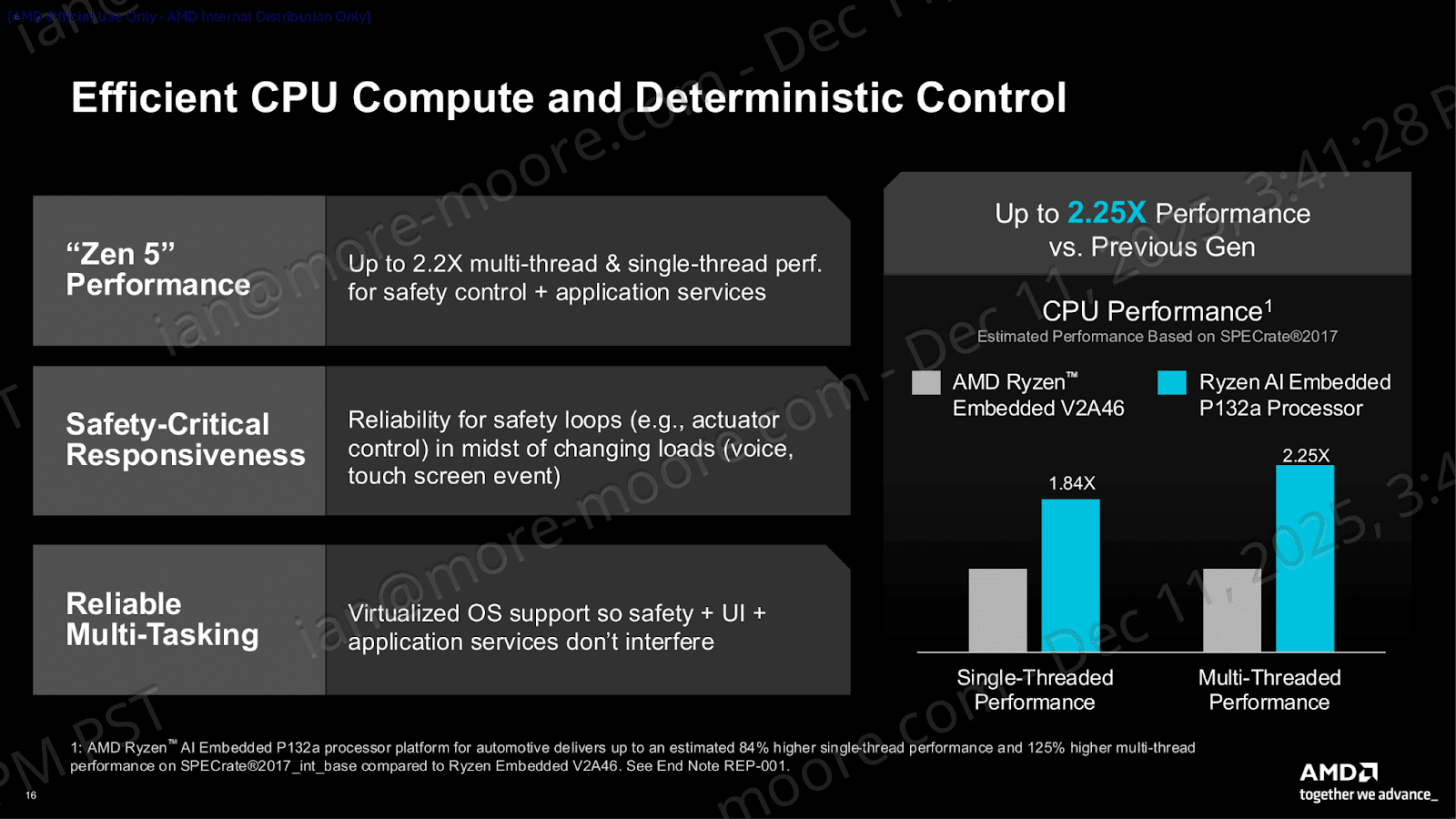

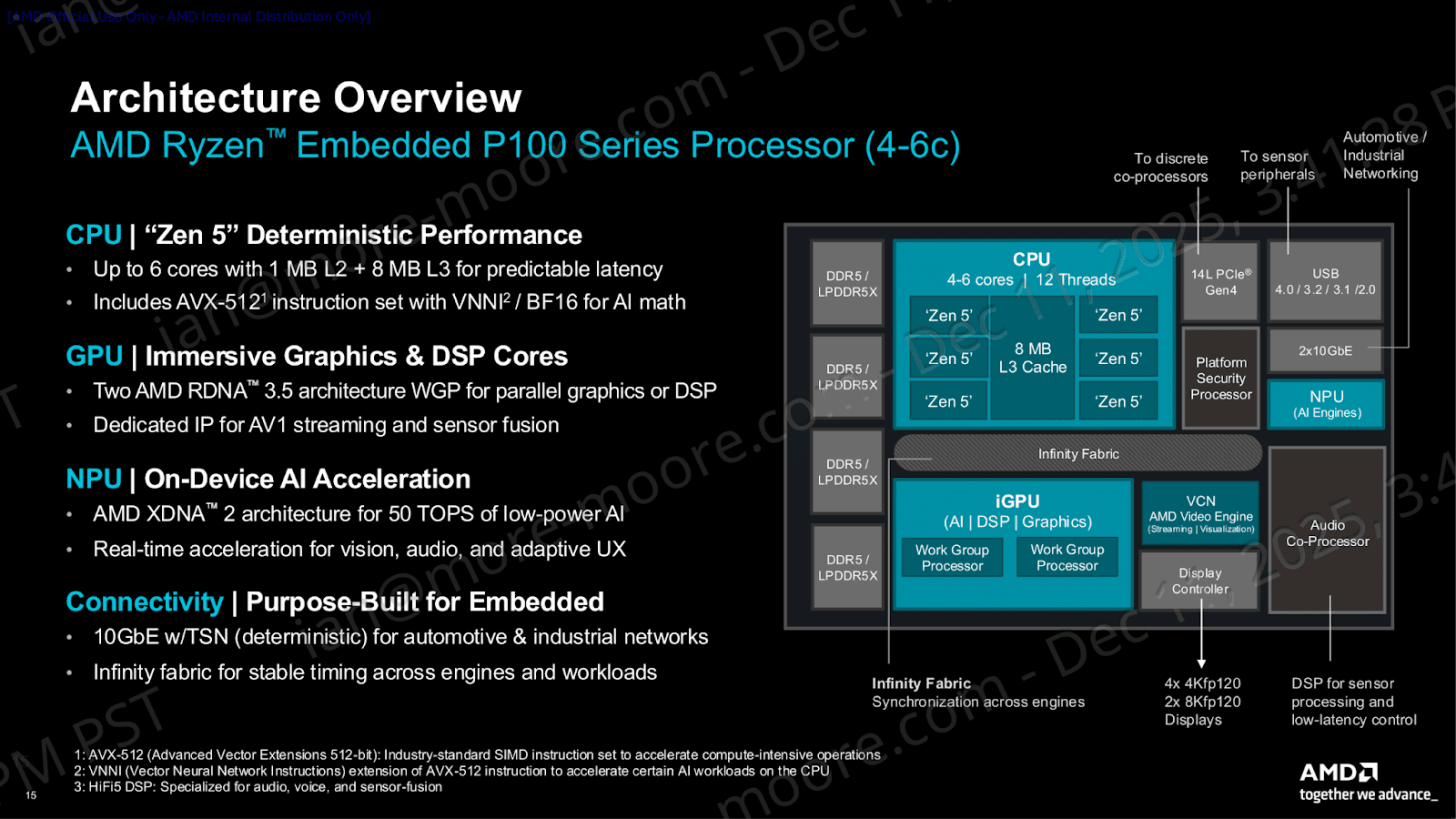

The P100-series architecture is explicit in its intent. This is not a consumer-style “edge AI” chip, but a client-class APU reworked around embedded priorities such as determinism, predictable latency, and I/O. The CPU uses Zen 5 with up to six cores and a defined cache hierarchy, alongside AVX-512 and VNNI/BF16 support, with workloads balanced sensibly between the CPU and the XDNA2 NPU.

The GPU block is RDNA 3.5, but AMD pitches it as “immersive graphics and DSP cores,” leaning into parallel graphics or signal processing alongside dedicated IP for AV1 streaming. The NPU is based on XDNA 2 at up to 50 TOPS, with emphasis on real-time vision, audio, and adaptive UX rather than just fancy headline model demos. Other notable inclusions are TSN-capable 10GbE, PCIe Gen4, USB, platform security, and a display controller all interconnected by Infinity Fabric, which makes P100 viable as a consolidated compute node instead of just a pile of discrete parts.

AMD’s marketing also ties its software stacks, such as ROCm, into the P100 series Embedded product. It feels as if AMD is trying to prove that the P100 isn’t just a neatly bundled CPU+GPU+NPU package, but something that can actually be shipped without any software headaches. The key messaging seems to be ‘unified software for heterogeneous embedded’, with AMD leaning on familiar names such as Yocto, Ubuntu, Android, RTOS, and Xen, with support for mainstream media stacks and APIs such as FFmpeg and Vulkan/OpenCL. On top of that come CPU libraries and tools for Zen 5, ROCm and graphics APIs for RDNA 3.5, and Ryzen AI software for XDNA2. The overall subtext is that AMD is reducing integration pain with this platform launch as opposed to just throwing out more TOPS.

The final slide of AMD’s Ryzen AI Embedded P100 series press deck headlines features that are exactly what should be expected for digital cockpits and even industrial HMI: performance per watt down to 15 W in a 25 x 40 mm BGA package, with multi-display capability for up to 4 x 4K or 2 x 8K displays. It even offers real-time AI acceleration pitched for voice, vision, and awareness. AMD rounds things out with common credibility markers that buyers expect, such as 24/7 operation, 10-year longevity, and an ‘open-source’ software stack built on virtualization and partitioning. This means mixed workloads can coexist without compromising system reliability.

Conclusion

Piecing everything together, AMD’s roll out of announcements at CES 2026 is basically a mid-cycle refresh of existing silicon and technologies, but with some pretty interesting additions. The biggest AI play for AMD, aside from the new laptop updates, is the introduction of Ryzen AI Halo, which looks to frame its silicon as a contender in the mini workstation market where DGX Spark operates, but with more compatible Windows and Linux software stacks, and better value. The addition of 128 GB of unified memory on the platform is welcome and does alleviate some of the memory capacity restrictions of integrated graphics; the slight issue here is that more memory (DRAM) comes at a much higher cost given what’s going on in the RAM market on the whole. DRAM is more expensive as a result of AI gulping up memory allocation, so pitching the platform primarily on value versus the competition loses a little bit of bite.

There’s enough to make a bit of noise and keep Zen 5 relevant with the Ryzen AI 400 series refresh, especially Ryzen AI Max+, but if AI isn’t your bag, there’s little in the way of anything new to satisfy demand; one desktop SKU addition doesn’t do much to bolster things on desktop. The real through-line is that AMD is trying to sell the portfolio as a product, with software across multiple areas doing the heavy lifting rather than hardware launches. Redstone is the gaming-facing version of this, leaning on reconstruction and ML-backed features to make ray tracing and higher resolutions feel more viable without anything in the way of new GPU SKUs. ROCm is the AI-facing version of this, where the news is less about benchmarking claims and more about positioning it as a first-class, Windows-ready, accessible stack. Even the Embedded P100 announcement fits the same kind of template: take the same compute blocks and package them for markets that care about things like determinism, lifecycle, and qualification above much else.

Now, we’re also working on the enterprise coverage. AMD gave some details about its MI455X GPUs and Helios rack-scale, so stay tuned for that.